
WIS2 in a box training

WIS2 in a box (wis2box) is a Free and Open Source (FOSS) Reference Implementation of a WMO WIS2 Node. The project provides a plug-and-play toolset to ingest, process, and publish weather/climate/water data using standards-based approaches in alignment with the WIS2 principles. wis2box also provides access to all data in the WIS2 network. wis2box is designed to have a low barrier to entry for data providers, providing enabling infrastructure and services for data discovery, access, and visualization.

This training provides step-by-step explanations of various aspects of the wis2box project as well as a number of exercises to help you publish and download data from WIS2. The training is provided in the form of overview presentations as well as hands-on practical exercises.

Participants will be able to work with sample test data and metadata, as well as integrate their own data and metadata.

This training covers a wide range of topics (install/setup/configuration, publishing/downloading data, etc.).

Goals and learning outcomes

The goals of this training are to become familiar with the following:

- WIS2 architecture core concepts and components

- data and metadata formats used in WIS2 for discovery and access

- wis2box architecture and environment

- wis2box core functions:

  - metadata management

  - data ingest and transformation to BUFR format

  - MQTT broker for WIS2 message publishing

  - HTTP endpoint for data download

  - API endpoint for programmatic access to data

Navigation

The left-hand navigation provides a table of contents for the entire training.

The right-hand navigation provides a table of contents for a specific page.

Prerequisites

Knowledge

- Basic Linux commands (see the cheatsheet)

- Basic knowledge of networking and Internet protocols

Software

This training requires the following tools:

- An instance running Ubuntu OS (provided by WMO trainers during local training sessions); see Accessing your student VM

- SSH client to access your instance

- MQTT Explorer on your local machine

- SCP or SFTP client to copy files between your local machine and your instance

Conventions

Question

A section marked like this invites you to answer a question.

You will also notice tip and note sections within the text:

Tip

Tips share help on how to best achieve tasks.

Note

Notes provide additional information on the topic covered by the practical session, as well as how to best achieve tasks.

Examples are indicated as follows:

Configuration


Snippets which need to be typed in a terminal/console are indicated as:

echo 'Hello world'

Container names (running images) are denoted in bold.

Training location and materials

The training contents, wiki and issue tracker are managed on GitHub at https://github.com/World-Meteorological-Organization/wis2box-training.

Printing the material

This training can be exported to PDF. To save or print this training material, go to the print page, and select File > Print > Save as PDF.

Exercise materials

Exercise materials can be downloaded from the exercise-materials.zip zipfile.

Support

For issues/bugs/suggestions or improvements/contributions to this training, please use the GitHub issue tracker.

All wis2box bugs, enhancements and issues can be reported on GitHub.

For additional support or questions, please contact wis2-support@wmo.int.

As always, the wis2box core documentation can be found at https://docs.wis2box.wis.wmo.int.

Contributions are always encouraged and welcome!

Practical sessions

Connecting to WIS2 over MQTT

Learning outcomes

By the end of this practical session, you will be able to:

- connect to the WIS2 Global Broker using MQTT Explorer

- review the WIS2 topic structure

- review the WIS2 notification message structure

Introduction

WIS2 uses the MQTT protocol to advertise the availability of weather/climate/water data. The WIS2 Global Broker subscribes to all WIS2 Nodes in the network and republishes the messages it receives. The Global Cache subscribes to the Global Broker, downloads the data referenced in each message and then republishes the message on the cache topic with a new URL. The Global Discovery Catalogue subscribes to the discovery metadata published via the Global Broker and provides a search API.

This is an example of the WIS2 notification message structure for a message received on the topic origin/a/wis2/br-inmet/data/core/weather/surface-based-observations/synop:

{
  "id": "59f9b013-c4b3-410a-a52d-fff18f3f1b47",
  "type": "Feature",
  "version": "v04",
  "geometry": {
    "coordinates": [
      -38.69389,
      -17.96472,
      60
    ],
    "type": "Point"
  },
  "properties": {
    "data_id": "br-inmet/data/core/weather/surface-based-observations/synop/WIGOS_0-76-2-2900801000W83499_20240815T060000",
    "datetime": "2024-08-15T06:00:00Z",
    "pubtime": "2024-08-15T09:52:02Z",
    "integrity": {
      "method": "sha512",
      "value": "TBuWycx/G0lIiTo47eFPBViGutxcIyk7eikppAKPc4aHgOmTIS5Wb9+0v3awMOyCgwpFhTruRRCVReMQMp5kYw=="
    },
    "content": {
      "encoding": "base64",
      "value": "QlVGUgAA+gQAABYAACsAAAAAAAIAHAAH6AgPBgAAAAALAAABgMGWx1AAAM0ABOIAAAODM0OTkAAAAAAAAAAAAAAKb5oKEpJ6YkJ6mAAAAAAAAAAAAAAAAv0QeYA29WQa87ZhH4CQP//z+P//BD////+ASznXuUb///8MgAS3/////8X///e+AP////AB/+R/yf////////////////////6/1/79H/3///gEt////////4BLP6QAf/+/pAB//4H0YJ/YeAh/f2///7TH/////9+j//f///////////////////v0f//////////////////////wNzc3Nw==",
      "size": 250
    },
    "wigos_station_identifier": "0-76-2-2900801000W83499"
  },
  "links": [
    {
      "rel": "canonical",
      "type": "application/bufr",
      "href": "http://wis2bra.inmet.gov.br/data/2024-08-15/wis/br-inmet/data/core/weather/surface-based-observations/synop/WIGOS_0-76-2-2900801000W83499_20240815T060000.bufr4",
      "length": 250
    }
  ]
}
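When a message embeds the data inline, as in the example above, a client can decode properties.content and check it against properties.integrity before using it. The following Python sketch shows one way to do this with the standard library only. The function names are illustrative, and it assumes that the integrity method name (here sha512) is one that Python's hashlib recognises and that content.size is the length of the decoded data.

```python
import base64
import hashlib


def decode_content(message: dict) -> bytes:
    """Return the data embedded in properties.content of a WIS2 notification."""
    content = message["properties"]["content"]
    if content.get("encoding") != "base64":
        raise ValueError(f"unsupported encoding: {content.get('encoding')}")
    data = base64.b64decode(content["value"])
    # Assumption: properties.content.size is the length of the decoded bytes
    if "size" in content and len(data) != content["size"]:
        raise ValueError("decoded size does not match properties.content.size")
    return data


def verify_integrity(message: dict, data: bytes) -> bool:
    """Compare the hash of data with properties.integrity (base64-encoded digest)."""
    integrity = message["properties"]["integrity"]
    # hashlib.new() accepts algorithm names such as "sha512"
    digest = hashlib.new(integrity["method"], data).digest()
    return base64.b64encode(digest).decode("ascii") == integrity["value"]
```

A decoded BUFR message starts with the bytes b"BUFR", which gives a quick sanity check on the result of decode_content().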

In this practical session you will learn how to use the MQTT Explorer tool to set up an MQTT client connection to a WIS2 Global Broker and display WIS2 notification messages.

MQTT Explorer is a useful tool to browse the topic structure of a given MQTT broker and review the data being published.

Note that MQTT is primarily used for machine-to-machine communication, meaning that a client would normally parse the messages automatically as they are received. To work with MQTT programmatically (for example, in Python), you can use MQTT client libraries such as paho-mqtt to connect to an MQTT broker and process incoming messages. Numerous MQTT client and server implementations exist, depending on your requirements and technical environment.
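As an illustration of the programmatic approach, here is a minimal subscriber sketch. It assumes paho-mqtt version 2.x (which requires the CallbackAPIVersion argument) and reuses the public Global Broker details given later in this session. summarise() is a hypothetical helper that only prints a few fields of each message.

```python
import json


def summarise(topic: str, payload: bytes) -> str:
    """Build a one-line summary of a WIS2 notification message."""
    message = json.loads(payload)
    props = message.get("properties", {})
    return f"{topic} | pubtime={props.get('pubtime')} | data_id={props.get('data_id')}"


def main() -> None:
    # Imported here so that summarise() can be used without paho-mqtt installed
    import paho.mqtt.client as mqtt  # assumes paho-mqtt >= 2.0

    def on_connect(client, userdata, flags, reason_code, properties):
        # Subscribing on (re)connect keeps the subscription after a reconnect
        client.subscribe("origin/a/wis2/#")

    def on_message(client, userdata, msg):
        print(summarise(msg.topic, msg.payload))

    client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
    client.username_pw_set("everyone", "everyone")
    client.tls_set()  # port 8883 uses TLS; the system CA certificates are used
    client.on_connect = on_connect
    client.on_message = on_message
    client.connect("globalbroker.meteo.fr", 8883)
    client.loop_forever()
```

Call main() to start listening; it blocks until interrupted. The subscription origin/a/wis2/# produces a high volume of messages, so a narrower topic is usually more practical in a real client.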

Using MQTT Explorer to connect to the Global Broker

To view messages published by a WIS2 Global Broker, you can use MQTT Explorer, which can be downloaded from the MQTT Explorer website.

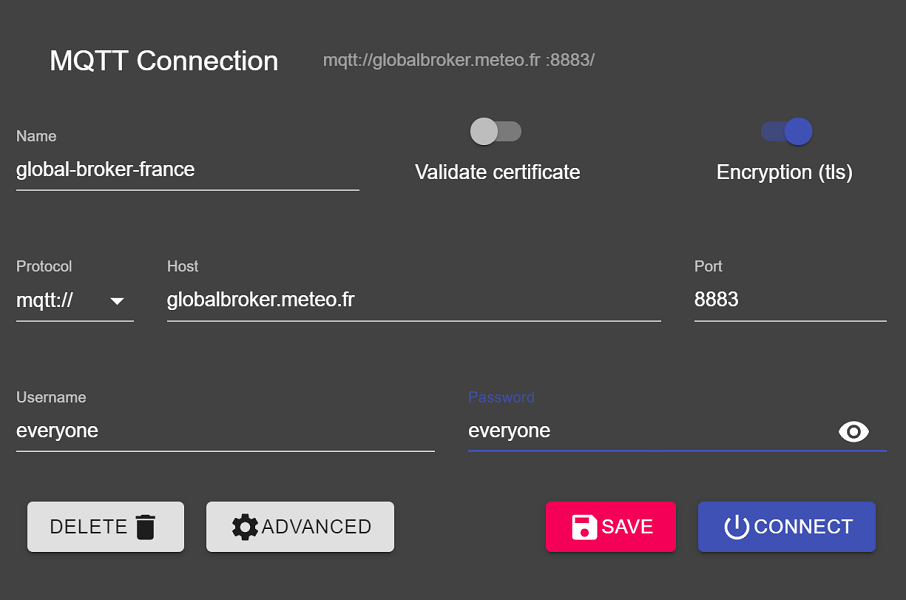

Open MQTT Explorer and add a new connection to the Global Broker hosted by MeteoFrance using the following details:

- host: globalbroker.meteo.fr

- port: 8883

- username: everyone

- password: everyone

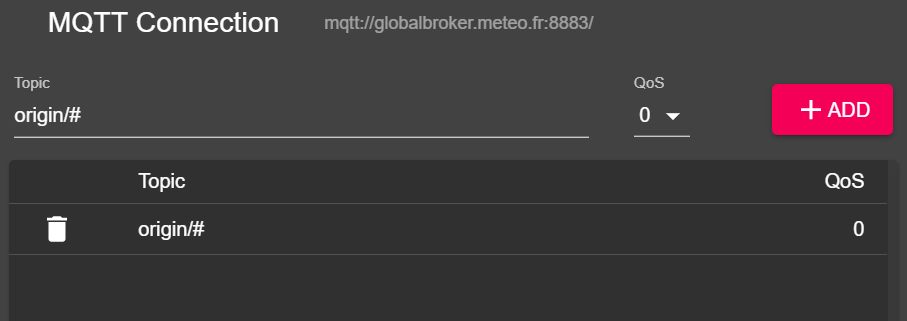

Click on the 'ADVANCED' button, remove the pre-configured topics and add the following topics to subscribe to:

origin/a/wis2/#

Note

When setting up MQTT subscriptions you can use the following wildcards:

- Single-level (+): a single-level wildcard replaces exactly one topic level

- Multi-level (#): a multi-level wildcard replaces any number of topic levels and must be the last character of the subscription

In this case origin/a/wis2/# will subscribe to all topics under the origin/a/wis2 topic.
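The matching rules can be expressed as a small Python function. This is an illustrative sketch of the standard MQTT topic-filter semantics, not part of wis2box: + matches exactly one level, # matches all remaining levels (including the parent level itself), and topics beginning with $ (such as $SYS) are not matched by a wildcard in the first level.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a subscription filter."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Topics starting with "$" are not matched by a leading wildcard
    if t_levels[0].startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # matches this level and everything below it
        if i >= len(t_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)
```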

Click 'BACK', then 'SAVE' to save your connection and subscription details. Then click 'CONNECT':

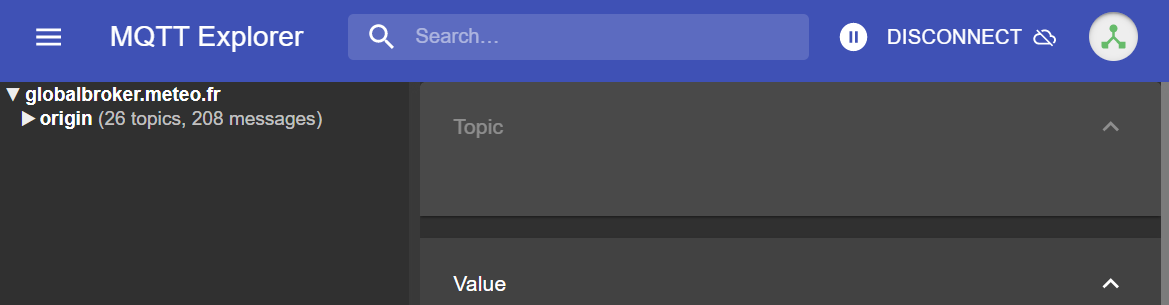

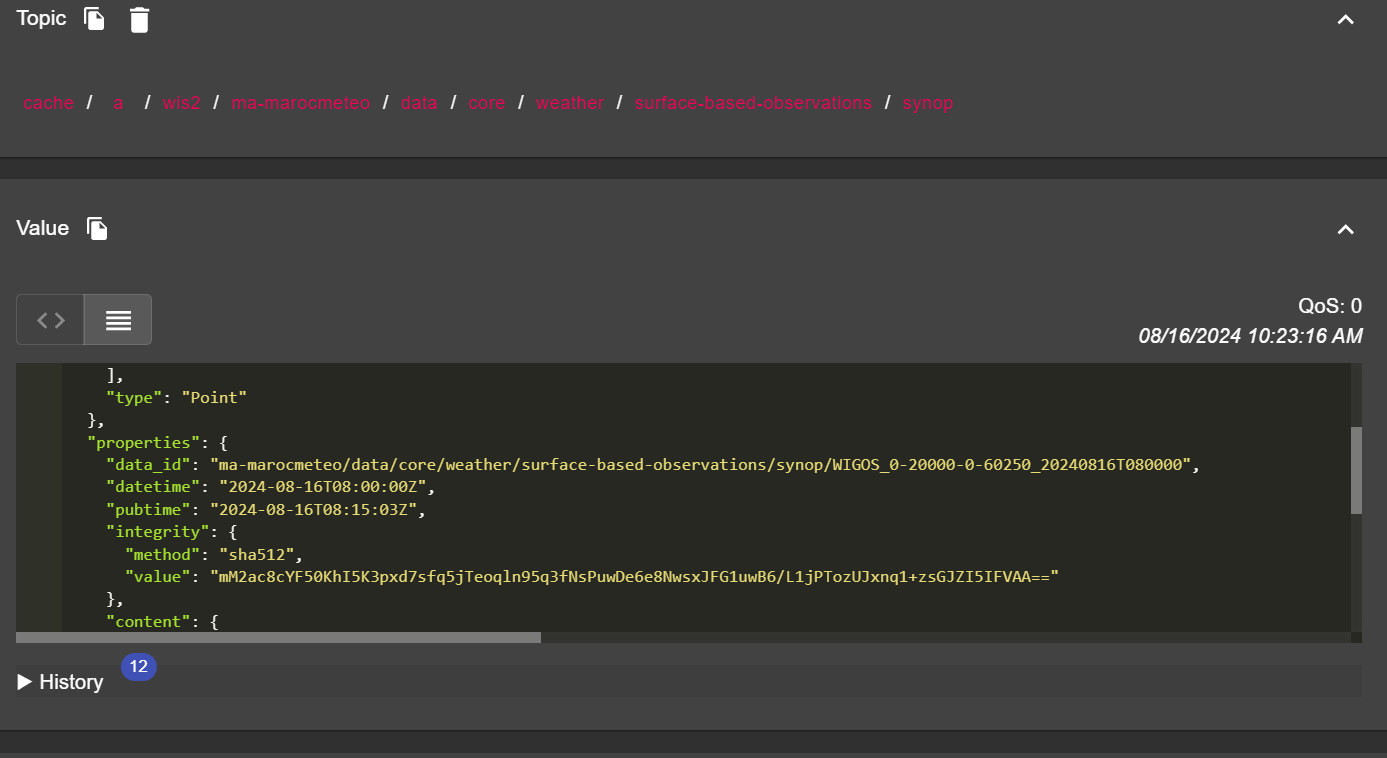

Messages should start appearing in your MQTT Explorer session as follows:

You are now ready to start exploring the WIS2 topics and message structure.

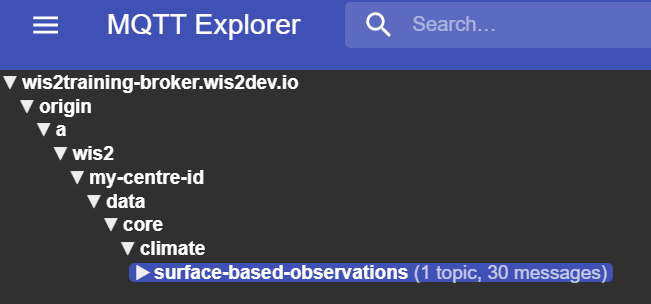

Exercise 1: Review the WIS2 topic structure

Use MQTT Explorer to browse the topic structure under the origin topics.

Question

How can we distinguish the WIS centre that published the data?

Answer

You can click on the left-hand window in MQTT Explorer to expand the topic structure.

We can distinguish the WIS centre that published the data by looking at the fourth level of the topic structure. For example, the following topic:

origin/a/wis2/br-inmet/data/core/weather/surface-based-observations/synop

tells us that the data was published by a WIS centre with the centre-id br-inmet, which is the centre-id for Instituto Nacional de Meteorologia - INMET, Brazil.
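Because each level has a fixed position, a client can extract the centre-id by splitting the topic on /. The sketch below is illustrative: the field names follow the WIS2 Topic Hierarchy levels as we understand them and should be treated as assumptions, and the parser only handles data topics such as the one above.

```python
from typing import NamedTuple


class Wis2Topic(NamedTuple):
    channel: str             # level 1: "origin" or "cache"
    version: str             # level 2: "a"
    system: str              # level 3: "wis2"
    centre_id: str           # level 4: the publishing WIS centre
    notification_type: str   # level 5: e.g. "data"
    data_policy: str         # level 6: "core" or "recommended"
    rest: tuple[str, ...]    # remaining levels (discipline, sub-discipline, ...)


def parse_topic(topic: str) -> Wis2Topic:
    """Split a WIS2 data topic into its named levels."""
    levels = topic.split("/")
    if len(levels) < 6:
        raise ValueError(f"too few levels for a WIS2 data topic: {topic}")
    return Wis2Topic(*levels[:6], tuple(levels[6:]))
```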

Question

How can we distinguish between messages published by WIS-centres hosting a GTS-to-WIS2 gateway and messages published by WIS-centres hosting a WIS2 node?

Answer

We can distinguish messages coming from a GTS-to-WIS2 gateway by looking at the centre-id in the topic structure. For example, the following topic:

origin/a/wis2/de-dwd-gts-to-wis2/data/core/I/S/A/I/01/sbbr

tells us that the data was published by the GTS-to-WIS2 gateway hosted by Deutscher Wetterdienst (DWD), Germany. The GTS-to-WIS2 gateway is a special type of data publisher that publishes data from the Global Telecommunication System (GTS) to WIS2. The topic structure is composed of the TTAAii CCCC headers of the GTS messages.
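Following the same idea, the GTS abbreviated heading can be rebuilt from the gateway topic. This sketch assumes, based only on the example topic above, that the six levels after data/core are T1, T2, A1, A2, ii and CCCC, one per level; gts_header_from_topic is a hypothetical helper.

```python
def gts_header_from_topic(topic: str) -> str:
    """Rebuild 'TTAAii CCCC' from a GTS-to-WIS2 gateway topic (assumed layout)."""
    levels = topic.split("/")
    # Assumed layout: origin/a/wis2/<centre-id>/data/<policy>/T1/T2/A1/A2/ii/CCCC
    if len(levels) != 12:
        raise ValueError(f"unexpected number of levels: {topic}")
    t1, t2, a1, a2, ii, cccc = levels[6:12]
    return f"{t1}{t2}{a1}{a2}{ii} {cccc.upper()}"
```

For the example topic this returns "ISAI01 SBBR".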

Exercise 2: Review the WIS2 message structure

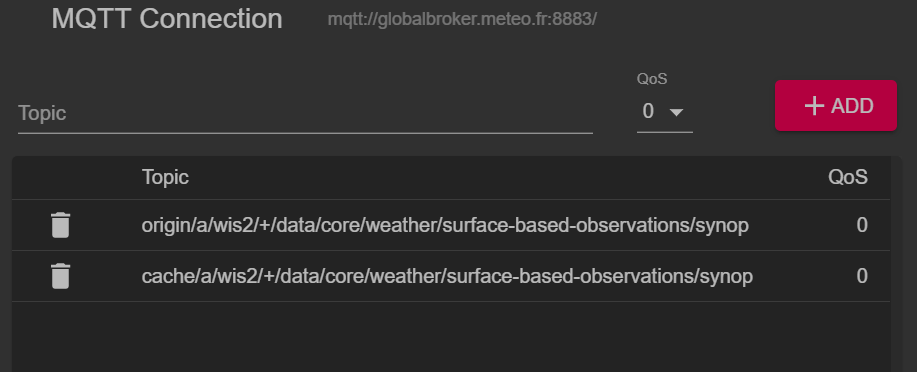

Disconnect MQTT Explorer from the Global Broker and update the 'Advanced' section to change the subscriptions to the following:

origin/a/wis2/+/data/core/weather/surface-based-observations/synop

cache/a/wis2/+/data/core/weather/surface-based-observations/synop

Note

The + wildcard is used to subscribe to all WIS-centres.

Reconnect to the Global Broker and wait for messages to appear.

You can view the content of the WIS2 message in the "Value" section on the right-hand side. Expand the topic structure level by level until you reach the last level, then review the content of one of the messages.

Question

How can we identify when the data was published? And how can we identify when the data was collected?

Answer

The timestamp that the data was published is contained in the properties section of the message with a key of pubtime.

The timestamp that the data was collected is contained in the properties section of the message with a key of datetime.
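Comparing the two timestamps gives the delay between observation and publication. A minimal sketch, assuming the message carries a single datetime value in RFC 3339 format with a trailing Z, as in the example message at the start of this session:

```python
from datetime import datetime, timedelta


def parse_rfc3339(value: str) -> datetime:
    # datetime.fromisoformat() before Python 3.11 does not accept a trailing "Z"
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def publication_delay(message: dict) -> timedelta:
    """Time between the observation (datetime) and its publication (pubtime)."""
    props = message["properties"]
    return parse_rfc3339(props["pubtime"]) - parse_rfc3339(props["datetime"])
```

For the example message (datetime 2024-08-15T06:00:00Z, pubtime 2024-08-15T09:52:02Z) the delay is 3 hours, 52 minutes and 2 seconds.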

Question

How can we download the data from the URL provided in the message?

Answer

The URL is contained in the links section with rel="canonical" and defined by the href key.

You can copy the URL and paste it into a web browser to download the data.
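A client would normally resolve the URL programmatically rather than through a browser. The sketch below picks the first link with rel="canonical" and downloads it with Python's standard urllib; the function names are illustrative and no retry or error handling is included.

```python
import urllib.request


def canonical_href(message: dict) -> str:
    """Return the href of the first link with rel="canonical"."""
    for link in message.get("links", []):
        if link.get("rel") == "canonical":
            return link["href"]
    raise KeyError("no canonical link in message")


def download(message: dict, timeout: float = 30.0) -> bytes:
    """Fetch the data referenced by the canonical link."""
    with urllib.request.urlopen(canonical_href(message), timeout=timeout) as response:
        return response.read()
```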

Exercise 3: Review the difference between 'origin' and 'cache' topics

Make sure you are still connected to the Global Broker using the topic subscriptions origin/a/wis2/+/data/core/weather/surface-based-observations/synop and cache/a/wis2/+/data/core/weather/surface-based-observations/synop as described in Exercise 2.

Try to identify a message for the same centre-id published on both the origin and cache topics.

Question

What is the difference between the messages published on the origin and cache topics?

Answer

The messages published on the origin topics are the original messages which the Global Broker republishes from the WIS2 Nodes in the network.

The messages published on the cache topics are the messages for data that has been downloaded by the Global Cache. If you check the content of a message from a topic starting with cache, you will see that the 'canonical' link has been updated to a new URL.

There are multiple Global Caches in the WIS2 network, so you will receive one message from each Global Cache that has downloaded the data.

The Global Cache will only download and republish messages that were published on the ../data/core/... topic hierarchy.
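To compare the two channels programmatically, messages can be grouped by properties.data_id. This sketch assumes that the Global Cache keeps the data_id of the original message when it republishes it, and that messages are passed in as (topic, parsed JSON) pairs; the helper name is hypothetical.

```python
from collections import defaultdict


def hrefs_by_data_id(messages):
    """Group canonical hrefs by data_id, split into 'origin' and 'cache' channels.

    messages: iterable of (topic, message_dict) pairs.
    """
    grouped = defaultdict(lambda: {"origin": [], "cache": []})
    for topic, message in messages:
        channel = topic.split("/", 1)[0]  # first topic level: origin or cache
        if channel not in ("origin", "cache"):
            continue
        for link in message.get("links", []):
            if link.get("rel") == "canonical":
                grouped[message["properties"]["data_id"]][channel].append(link["href"])
    return dict(grouped)
```

With several Global Caches, the "cache" list for a data_id will hold one URL per cache that republished it.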

Conclusion

Congratulations!

In this practical session, you learned:

- how to subscribe to WIS2 Global Broker services using MQTT Explorer

- the WIS2 topic structure

- the WIS2 notification message structure

- the difference between core and recommended data

- the topic structure used by the GTS-to-WIS2 gateway

- the difference between Global Broker messages published on the origin and cache topics

Accessing your student VM

Learning outcomes

By the end of this practical session, you will be able to:

- access your student VM over SSH and WinSCP

- verify the required software for the practical exercises is installed

- verify you have access to exercise materials for this training on your local student VM

Introduction

As part of locally run wis2box training sessions, you can access your personal student VM on the local training network named "WIS2-training".

Your student VM has the following software pre-installed:

- Ubuntu 22.04.3 LTS ubuntu-22.04.3-live-server-amd64.iso

- Python 3.10.12

- Docker 24.0.6

- Docker Compose 2.21.0

- Text editors: vim, nano

Note

If you want to run this training outside of a local training session, you can provide your own instance using any cloud provider, for example:

- GCP (Google Cloud Platform) VM instance e2-medium

- AWS (Amazon Web Services) ec2-instance t3a.medium

- Azure (Microsoft) Azure Virtual Machine standard_b2s

Select Ubuntu Server 22.04 LTS as OS.

After creating your VM, ensure you have installed Python, Docker and Docker Compose, as described at wis2box-software-dependencies.

The release archive for wis2box used in this training can be downloaded as follows:

wget https://github.com/World-Meteorological-Organization/wis2box-release/releases/download/1.0.0/wis2box-setup.zip

unzip wis2box-setup.zip

You can always find the latest 'wis2box-setup' archive at https://github.com/World-Meteorological-Organization/wis2box/releases.

The exercise material used in this training can be downloaded as follows:

wget https://training.wis2box.wis.wmo.int/exercise-materials.zip

unzip exercise-materials.zip

The following additional Python packages are required to run the exercise materials:

pip3 install minio

pip3 install pywiscat==0.2.2

If you are using the student VM provided during local WIS2 training sessions, the required software will already be installed.

Connect to your student VM on the local training network

Connect your PC to the local Wi-Fi network broadcast in the room during WIS2 training, as per the instructions provided by the trainer.

Use an SSH client to connect to your student VM using the following:

- Host: (provided during in-person training)

- Port: 22

- Username: (provided during in-person training)

- Password: (provided during in-person training)

Tip

Contact a trainer if you are unsure about the hostname/username or have issues connecting.

Once connected, please change your password to ensure others cannot access your VM:

username@student-vm:~$ passwd

Changing password for username.

Current password:

New password:

Retype new password:

passwd: password updated successfully

Verify software versions

To be able to run wis2box, the student VM should have Python, Docker and Docker Compose pre-installed.

Check Python version:

python3 --version

Python 3.10.12

Check docker version:

docker --version

Docker version 24.0.6, build ed223bc

Check Docker Compose version:

docker compose version

Docker Compose version v2.21.0

To allow your user to run Docker commands, it has been added to the docker group.

To test that your user can run Docker containers, run the hello-world image:

docker run hello-world

This should pull the hello-world image and run a container that prints a message.

Check that you see the following in the output:

...

Hello from Docker!

This message shows that your installation appears to be working correctly.

...

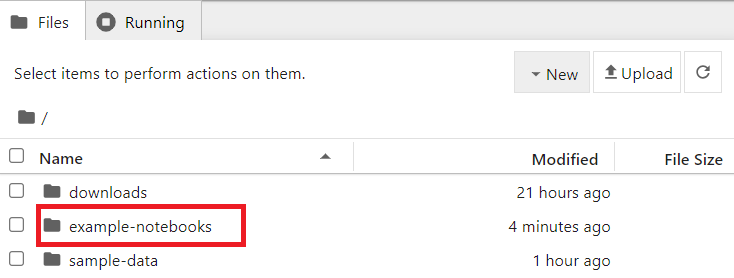

Inspect the exercise materials

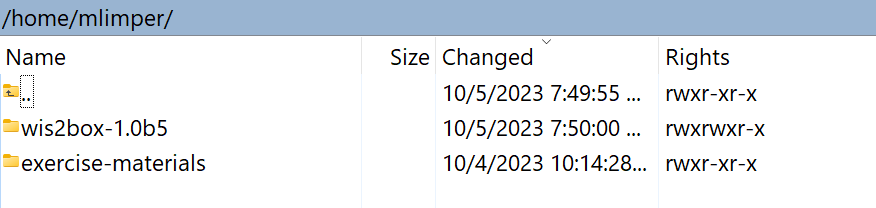

Inspect the contents of your home directory; these are the materials used as part of the training and practical sessions.

ls ~/

exercise-materials wis2box

If you have WinSCP installed on your local PC, you can use it to connect to your student VM and inspect the contents of your home directory and download or upload files between your VM and your local PC.

WinSCP is not required for the training, but it can be useful if you want to edit files on your VM using a text editor on your local PC.

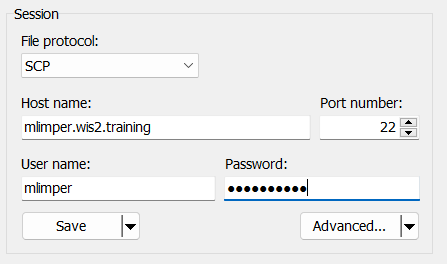

Here is how you can connect to your student VM using WinSCP:

Open WinSCP and click "New Site". You can create a new SCP connection to your VM as follows:

Click 'Save' and then 'Login' to connect to your VM.

You should then see the following content:

Conclusion

Congratulations!

In this practical session, you learned how to:

- access your student VM over SSH and WinSCP

- verify the required software for the practical exercises is installed

- verify you have access to exercise materials for this training on your local student VM

Initializing wis2box

Learning outcomes

By the end of this practical session, you will be able to:

- run the wis2box-create-config.py script to create the initial configuration

- start wis2box and check the status of its components

- view the contents of the wis2box-api

- access the wis2box-webapp

- connect to the local wis2box-broker using MQTT Explorer

Note

The current training materials are based on wis2box-release 1.0.0.

See Accessing your student VM for instructions on how to download and install the wis2box software stack if you are running this training outside of a local training session.

Preparation

Log in to your designated VM with your username and password and ensure you are in the wis2box directory:

cd ~/wis2box

Creating the initial configuration

The initial configuration for the wis2box requires:

- an environment file

wis2box.envcontaining the configuration parameters - a directory on the host-machine to share between the host machine and the wis2box containers defined by the

WIS2BOX_HOST_DATADIRenvironment variable

The wis2box-create-config.py script can be used to create the initial configuration of your wis2box.

It will ask you a set of questions to help set up your configuration.

You will be able to review and update the configuration files after the script has completed.

Run the script as follows:

python3 wis2box-create-config.py

wis2box-host-data directory

The script will ask you to enter the directory to be used for the WIS2BOX_HOST_DATADIR environment variable.

Note that you need to define the full path to this directory.

For example if your username is username, the full path to the directory is /home/username/wis2box-data:

username@student-vm-username:~/wis2box$ python3 wis2box-create-config.py

Please enter the directory to be used for WIS2BOX_HOST_DATADIR:

/home/username/wis2box-data

The directory to be used for WIS2BOX_HOST_DATADIR will be set to:

/home/username/wis2box-data

Is this correct? (y/n/exit)

y

The directory /home/username/wis2box-data has been created.

wis2box URL

Next, you will be asked to enter the URL for your wis2box. This is the URL that will be used to access the wis2box web application, API and UI.

Please use http://<your-hostname-or-ip> as the URL.

Please enter the URL of the wis2box:

For local testing the URL is http://localhost

To enable remote access, the URL should point to the public IP address or domain name of the server hosting the wis2box.

http://username.wis2.training

The URL of the wis2box will be set to:

http://username.wis2.training

Is this correct? (y/n/exit)

WEBAPP, STORAGE and BROKER passwords

When prompted for WIS2BOX_WEBAPP_PASSWORD, WIS2BOX_STORAGE_PASSWORD and WIS2BOX_BROKER_PASSWORD, you can either use the option of random password generation or define your own.

Don't worry about remembering these passwords; they will be stored in the wis2box.env file in your wis2box directory.

Review wis2box.env

Once the script has completed, check the contents of the wis2box.env file in your current directory:

cat ~/wis2box/wis2box.env

Or check the content of the file via WinSCP.
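If you want to read values from wis2box.env in your own scripts, a simple parser is enough for KEY=VALUE lines. This sketch assumes the file contains only such lines, blank lines and # comments (no export prefixes or multi-line values); it only reads the file and never modifies it.

```python
from pathlib import Path


def parse_env(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    env = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")  # split on the first "=" only
        # Drop optional surrounding quotes around the value
        env[key.strip()] = value.strip().strip("\"'")
    return env


def read_env_file(path: str = "~/wis2box/wis2box.env") -> dict:
    return parse_env(Path(path).expanduser().read_text())
```

For example, read_env_file()["WIS2BOX_STORAGE_DATA_RETENTION_DAYS"] returns the retention setting as a string. Avoid printing the whole dictionary, since it includes your passwords.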

Question

What is the value of WIS2BOX_BASEMAP_URL in the wis2box.env file?

Answer

The default value for WIS2BOX_BASEMAP_URL is https://{s}.tile.openstreetmap.org/{z}/{x}/{y}.png.

This URL refers to the OpenStreetMap tile server. If you want to use a different map provider, you can change this URL to point to a different tile server.

Question

What is the value of the WIS2BOX_STORAGE_DATA_RETENTION_DAYS environment variable in the wis2box.env file?

Answer

The default value for WIS2BOX_STORAGE_DATA_RETENTION_DAYS is 30 days. You can change this value to a different number of days if you wish.

The wis2box-management container runs a daily cron job to remove data older than the number of days defined by WIS2BOX_STORAGE_DATA_RETENTION_DAYS from the wis2box-public bucket and the API backend:

0 0 * * * su wis2box -c "wis2box data clean --days=$WIS2BOX_STORAGE_DATA_RETENTION_DAYS"
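The cron expression 0 0 * * * means minute 0 of hour 0 on every day, so the clean-up runs once a day at midnight. The retention rule itself can be illustrated with a short Python sketch. It is not the wis2box implementation: the boundary handling (strictly older than the cut-off date) and the assumption that the date can be read from a YYYY-MM-DD directory in the data URL, as in the canonical link of the example message in Connecting to WIS2 over MQTT, are illustrative choices.

```python
import re
from datetime import date, timedelta


def date_from_href(href: str) -> date:
    """Extract the first /YYYY-MM-DD/ path segment from a data URL (assumed layout)."""
    match = re.search(r"/(\d{4})-(\d{2})-(\d{2})/", href)
    if match is None:
        raise ValueError(f"no date segment in {href}")
    return date(*map(int, match.groups()))


def is_expired(data_date: date, today: date, retention_days: int) -> bool:
    """True if data_date is strictly before today minus retention_days (illustrative rule)."""
    return data_date < today - timedelta(days=retention_days)
```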

Note

The wis2box.env file contains environment variables defining the configuration of your wis2box. For more information consult the wis2box-documentation.

Do not edit the wis2box.env file unless you are sure of the changes you are making. Incorrect changes can cause your wis2box to stop working.

Do not share the contents of your wis2box.env file with anyone, as it contains sensitive information such as passwords.

Start wis2box

Ensure you are in the directory containing the wis2box software stack definition files:

cd ~/wis2box

Start wis2box with the following command:

python3 wis2box-ctl.py start

When running this command for the first time, you will see the following output:

No docker-compose.images-*.yml files found, creating one

Current version=Undefined, latest version=1.0.0

Would you like to update ? (y/n/exit)

Select y and the script will create the file docker-compose.images-1.0.0.yml, download the required Docker images and start the services.

Downloading the images may take some time depending on your internet connection speed. This step is only required the first time you start wis2box.

Inspect the status with the following command:

python3 wis2box-ctl.py status

Repeat this command until all services are up and running.

wis2box and Docker

wis2box runs as a set of Docker containers managed by Docker Compose.

The services are defined in the various docker-compose*.yml files, which can be found in the ~/wis2box/ directory.

The Python script wis2box-ctl.py is used to run the underlying Docker Compose commands that control the wis2box services.

You don't need to know the details of the Docker containers to run the wis2box software stack, but you can inspect the docker-compose*.yml files to see how the services are defined. If you are interested in learning more about Docker, you can find more information in the Docker documentation.

To login to the wis2box-management container, use the following command:

python3 wis2box-ctl.py login

Inside the wis2box-management container you can run various commands to manage your wis2box, such as:

- wis2box auth add-token --path processes/wis2box: to create an authorization token for the processes/wis2box endpoint

- wis2box data clean --days=<number-of-days>: to clean up data older than a certain number of days from the wis2box-public bucket

To exit the container and go back to the host machine, use the following command:

exit

Run the following command to see the docker containers running on your host machine:

docker ps

You should see the following containers running:

- wis2box-management

- wis2box-api

- wis2box-minio

- wis2box-webapp

- wis2box-auth

- wis2box-ui

- wis2downloader

- elasticsearch

- elasticsearch-exporter

- nginx

- mosquitto

- prometheus

- grafana

- loki

These containers are part of the wis2box software stack and provide the various services required to run the wis2box.
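You can also check the running containers from a script. This sketch calls docker ps --format "{{.Names}}" and compares the result with the container names listed above; it assumes the container names on your VM match that list exactly.

```python
import subprocess

# Container names listed in this section (assumed to match `docker ps` output exactly)
EXPECTED_CONTAINERS = {
    "wis2box-management", "wis2box-api", "wis2box-minio", "wis2box-webapp",
    "wis2box-auth", "wis2box-ui", "wis2downloader", "elasticsearch",
    "elasticsearch-exporter", "nginx", "mosquitto", "prometheus", "grafana", "loki",
}


def running_containers() -> set:
    """Return the names of running containers as reported by docker ps."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}"],
        capture_output=True, text=True, check=True,
    )
    return {name for name in result.stdout.splitlines() if name.strip()}


def missing_containers(running: set, expected: set = EXPECTED_CONTAINERS) -> set:
    """Expected containers that are not in the running set."""
    return set(expected) - set(running)
```

Printing sorted(missing_containers(running_containers())) shows an empty list when all expected containers are up.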

Run the following command to list the Docker volumes on your host machine:

docker volume ls

You should see the following volumes:

- wis2box_project_auth-data

- wis2box_project_es-data

- wis2box_project_htpasswd

- wis2box_project_minio-data

- wis2box_project_prometheus-data

- wis2box_project_loki-data

- wis2box_project_mosquitto-config

As well as some anonymous volumes used by the various containers.

The volumes starting with wis2box_project_ are used to store persistent data for the various services in the wis2box software stack.

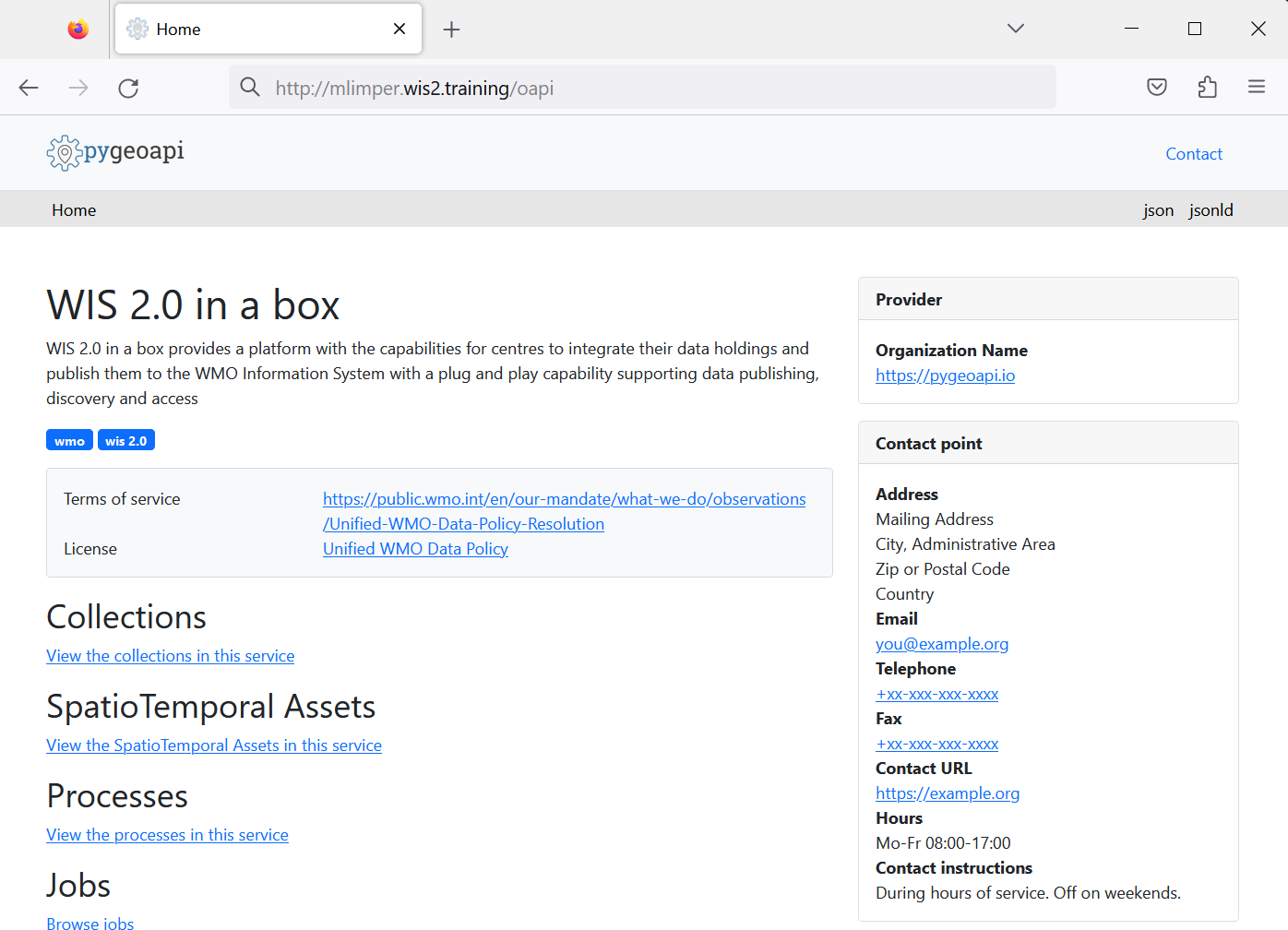

wis2box API

The wis2box contains an API (Application Programming Interface) that provides data access and processes for interactive visualization, data transformation and publication.

Open a new tab and navigate to the page http://YOUR-HOST/oapi.

This is the landing page of the wis2box API (running via the wis2box-api container).

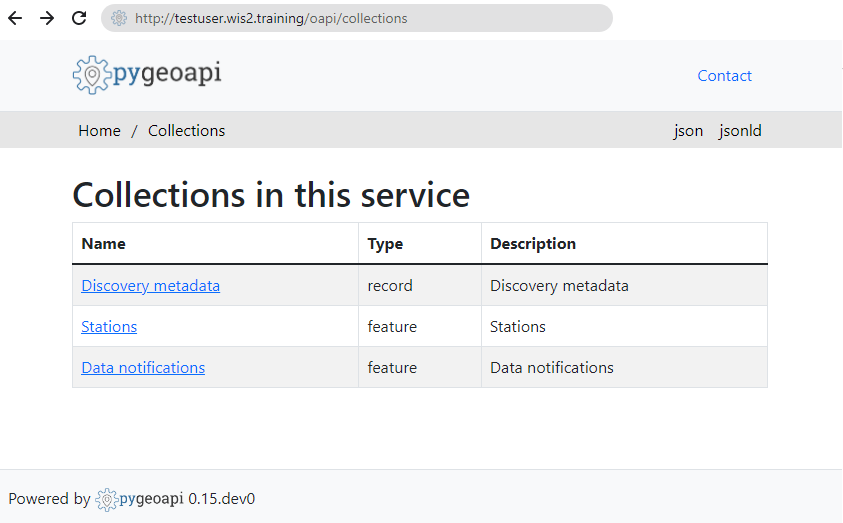

Question

What collections are currently available?

Answer

To view collections currently available through the API, click View the collections in this service:

The following collections are currently available:

- Stations

- Data notifications

- Discovery metadata

Question

How many data notifications have been published?

Answer

Click on "Data notifications", then click on Browse through the items of "Data Notifications".

You will note that the page says "No items" as no Data notifications have been published yet.
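The same information is available in machine-readable form, since the API follows OGC API conventions. The sketch below assumes the API returns JSON when ?f=json is appended (as pygeoapi-based services do) and that item responses include numberMatched; take the collection ids from the /collections response rather than guessing them.

```python
import json
import urllib.request


def fetch_json(url: str, timeout: float = 30.0) -> dict:
    """GET a URL and parse the JSON response body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def collection_titles(collections_doc: dict) -> dict:
    """Map collection id to title from a /collections response."""
    return {c["id"]: c.get("title", "") for c in collections_doc.get("collections", [])}


def number_matched(items_doc: dict) -> int:
    """Total number of items reported by an /items response.

    Falls back to counting the returned features if numberMatched is absent,
    which only reflects the current page of results.
    """
    if "numberMatched" in items_doc:
        return items_doc["numberMatched"]
    return len(items_doc.get("features", []))
```

For example, pass fetch_json("http://YOUR-HOST/oapi/collections?f=json") to collection_titles(), then pass fetch_json("http://YOUR-HOST/oapi/collections/<collection-id>/items?f=json") to number_matched(), using the id of the Data notifications collection.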

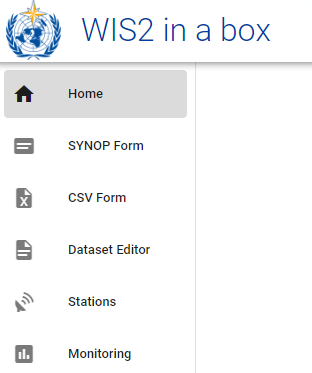

wis2box webapp

Open a web browser and visit the page http://YOUR-HOST/wis2box-webapp.

You will see a pop-up asking for your username and password. Use the default username wis2box-user and the WIS2BOX_WEBAPP_PASSWORD defined in the wis2box.env file and click "Sign in":

Note

Check your wis2box.env for the value of your WIS2BOX_WEBAPP_PASSWORD. You can use the following command to check the value of this environment variable:

grep WIS2BOX_WEBAPP_PASSWORD ~/wis2box/wis2box.env

Once logged in, move your mouse to the menu on the left to see the options available in the wis2box web application:

This is the wis2box web application to enable you to interact with your wis2box:

- create and manage datasets

- update/review your station metadata

- upload manual observations using the FM-12 SYNOP form

- monitor notifications published on your wis2box-broker

We will use this web application in a later session.

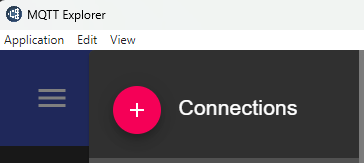

wis2box-broker

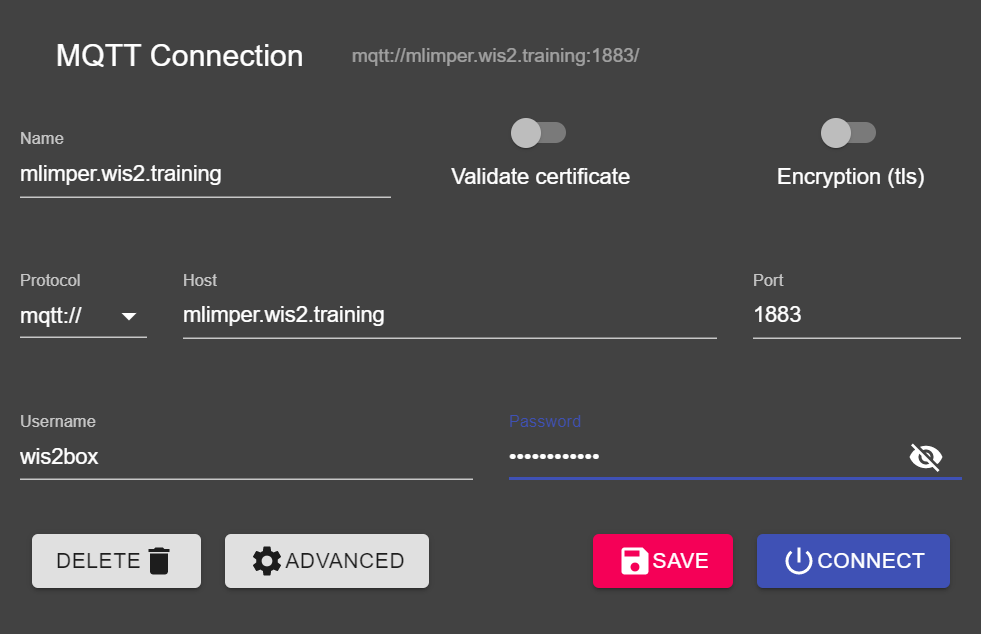

Open the MQTT Explorer on your computer and prepare a new connection to connect to your broker (running via the wis2box-broker container).

Click + to add a new connection:

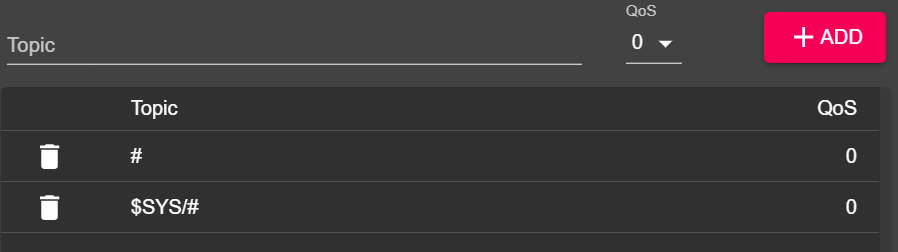

You can click on the 'ADVANCED' button and verify that you have subscriptions to the following topics:

#

$SYS/#

Note

The # topic is a wildcard subscription that will subscribe to all topics published on the broker.

The messages published under the $SYS topic are system messages published by the mosquitto service itself.

Use the following connection details, making sure to replace the value of <your-host> with your hostname and <WIS2BOX_BROKER_PASSWORD> with the value from your wis2box.env file:

- Protocol: mqtt://

- Host: <your-host>

- Port: 1883

- Username: wis2box

- Password: <WIS2BOX_BROKER_PASSWORD>

Note

You can check your wis2box.env for the value of your WIS2BOX_BROKER_PASSWORD. You can use the following command to check the value of this environment variable:

grep WIS2BOX_BROKER_PASSWORD ~/wis2box/wis2box.env

Note that this is your internal broker password; the Global Broker will use different (read-only) credentials to subscribe to your broker. Never share this password with anyone.

Make sure to click "SAVE" to store your connection details.

Then click "CONNECT" to connect to your wis2box-broker.

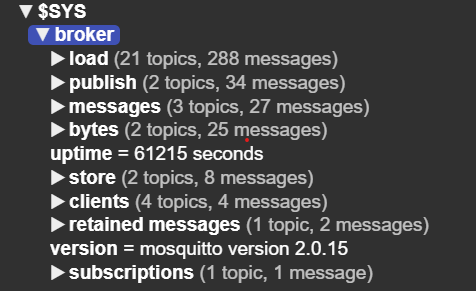

Once you are connected, verify that the internal mosquitto statistics are being published by your broker under the $SYS topic:

Keep the MQTT Explorer open, as we will use it to monitor the messages published on the broker.

Conclusion

Congratulations!

In this practical session, you learned how to:

- run the wis2box-create-config.py script to create the initial configuration

- start wis2box and check the status of its components

- access the wis2box-webapp and wis2box-API in a browser

- connect to the MQTT broker on your student VM using MQTT Explorer

Configuring datasets in wis2box

Learning outcomes

By the end of this practical session, you will be able to:

- create a new dataset

- create discovery metadata for a dataset

- configure data mappings for a dataset

- publish a WIS2 notification with a WCMP2 record

- update and re-publish your dataset

Introduction

wis2box uses datasets that are associated with discovery metadata and data mappings.

Discovery metadata is used to create a WCMP2 (WMO Core Metadata Profile 2) record that is shared using a WIS2 notification published on your wis2box-broker.

The data mappings are used to associate a data plugin to your input data, allowing your data to be transformed prior to being published using the WIS2 notification.

This session will walk you through creating a new dataset, creating discovery metadata, and configuring data mappings. You will inspect your dataset in the wis2box-api and review the WIS2 notification for your discovery metadata.

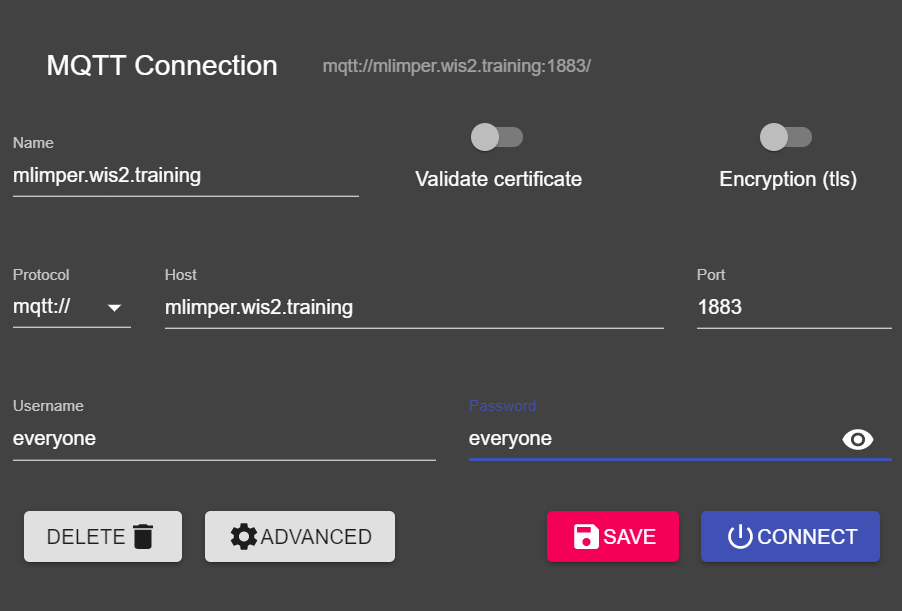

Preparation

Connect to your broker using MQTT Explorer.

Instead of using your internal broker credentials, use the public credentials everyone/everyone:

Note

You never need to share the credentials of your internal broker with external users. The 'everyone' user is a public user to enable sharing of WIS2 notifications.

The everyone/everyone credentials have read-only access to the topic 'origin/a/wis2/#'. This is the topic where the WIS2 notifications are published. The Global Broker can subscribe with these public credentials to receive the notifications.

The 'everyone' user will not see internal topics or be able to publish messages.

Open a browser and navigate to http://YOUR-HOST/wis2box-webapp. Make sure you are logged in and can access the 'dataset editor' page.

See the section on Initializing wis2box if you need to remember how to connect to the broker or access the wis2box-webapp.

Create an authorization token for processes/wis2box

You will need an authorization token for the 'processes/wis2box' endpoint to publish your dataset.

To create an authorization token, access your training VM over SSH and use the following commands to log in to the wis2box-management container:

cd ~/wis2box

python3 wis2box-ctl.py login

Then run the following command to create a randomly generated authorization token for the 'processes/wis2box' endpoint:

wis2box auth add-token --path processes/wis2box

You can also create a token with a specific value by providing the token as an argument to the command:

wis2box auth add-token --path processes/wis2box MyS3cretToken
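If you prefer to choose the token value yourself, make it hard to guess. A minimal sketch using Python's secrets module; the length of 32 random bytes is an arbitrary choice, not a wis2box requirement.

```python
import secrets


def new_token(nbytes: int = 32) -> str:
    """Return a URL-safe random token derived from nbytes random bytes."""
    return secrets.token_urlsafe(nbytes)
```

On the host, the one-liner python3 -c "import secrets; print(secrets.token_urlsafe(32))" prints such a value, which you could then pass as the last argument of wis2box auth add-token --path processes/wis2box.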

Make sure to copy the token value and store it on your local machine, as you will need it later.

Once you have your token, you can exit the wis2box-management container:

exit

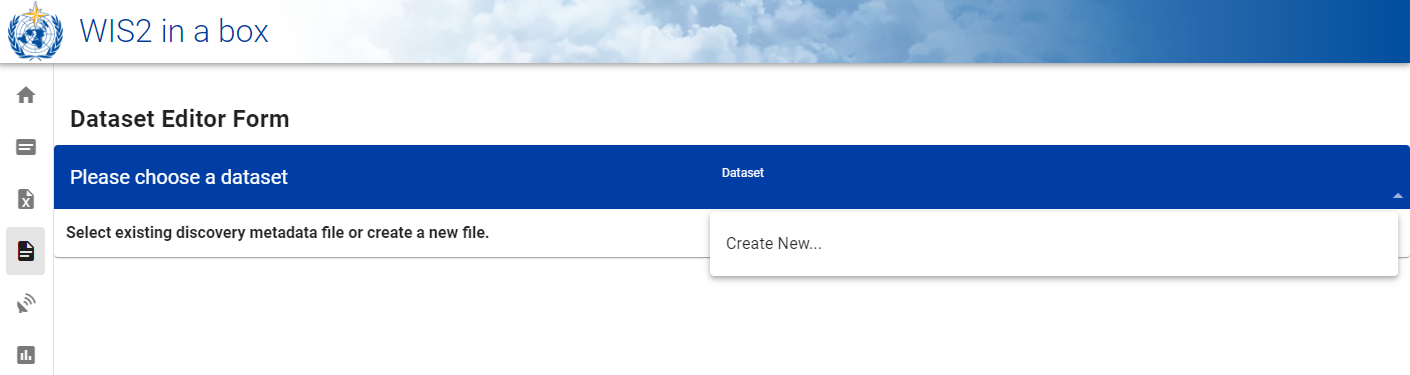

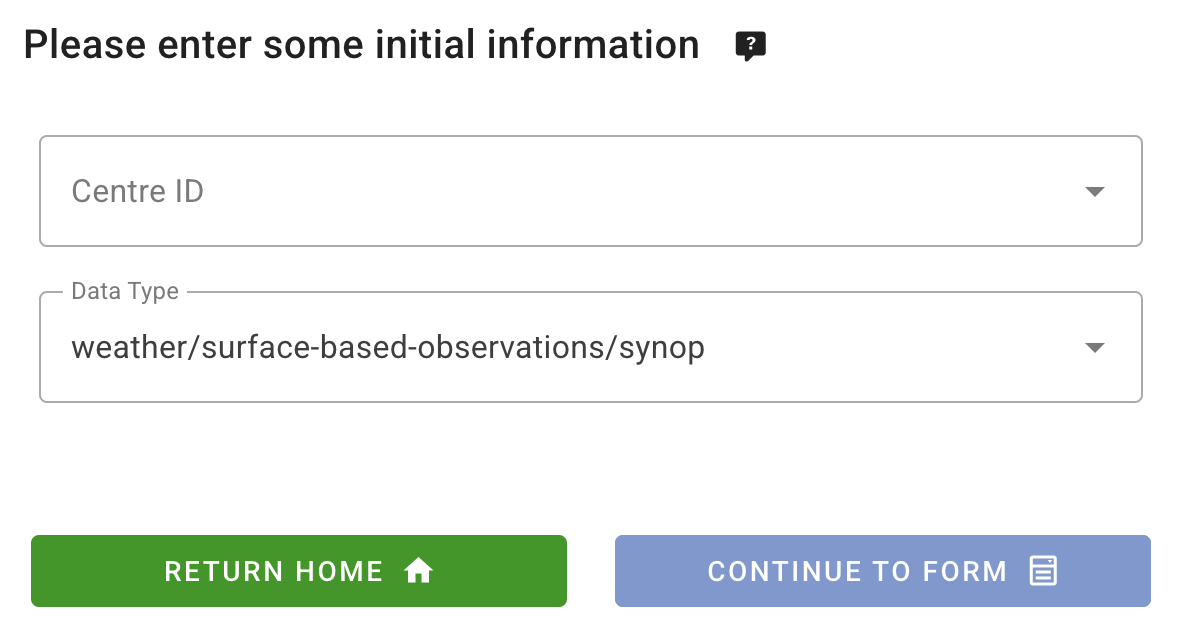

Creating a new dataset in the wis2box-webapp

Navigate to the 'dataset editor' page in the wis2box-webapp of your wis2box instance by going to http://YOUR-HOST/wis2box-webapp and selecting 'dataset editor' from the menu on the left-hand side.

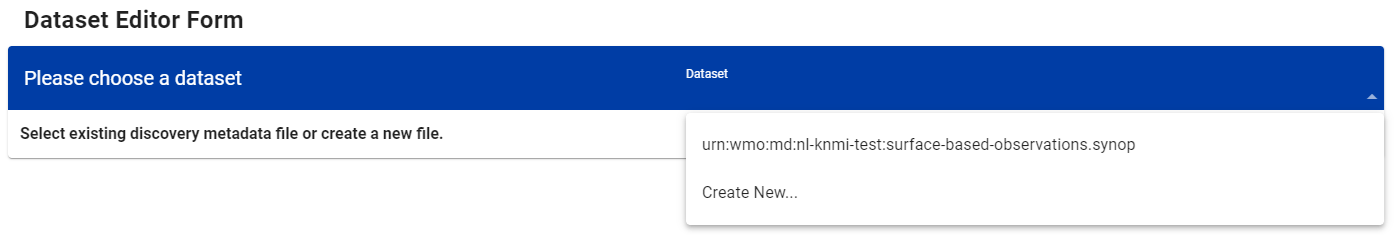

On the 'dataset editor' page, under the 'Datasets' tab, click on "Create New ...":

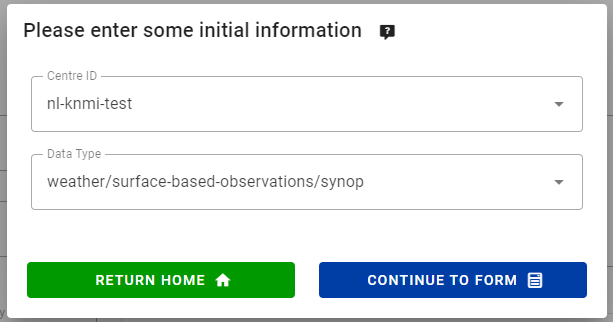

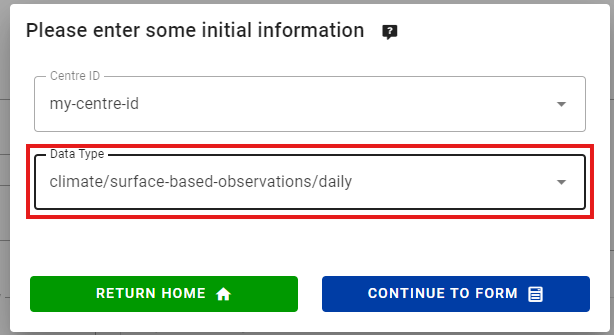

A pop-up window will appear, asking you to provide:

- Centre ID: this is the agency acronym (in lower case and no spaces), as specified by the WMO Member, that identifies the data centre responsible for publishing the data.

- Data Type: The type of data you are creating metadata for. You can choose between using a predefined template or selecting 'other'. If 'other' is selected, more fields will have to be manually filled.

Centre ID

Your centre-id should start with the TLD of your country, followed by a dash (-) and an abbreviated name of your organization (for example fr-meteofrance). The centre-id must be lowercase and use only alphanumeric characters, apart from the dash separator. The dropdown list shows all centre-ids currently registered in WIS2 as well as any centre-id you have already created in wis2box.
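The naming rules above can be checked with a regular expression. This is an illustrative sketch based only on the rules in this section (a lowercase country TLD, a dash, then lowercase alphanumeric parts separated by dashes); it is not the official WIS2 validation, and the list of registered centre-ids remains the authoritative reference.

```python
import re

# Lowercase TLD, a dash, then lowercase alphanumeric parts separated by dashes
CENTRE_ID_PATTERN = re.compile(r"[a-z]{2,}-[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_centre_id(centre_id: str) -> bool:
    """Check a candidate centre-id against the simplified naming rules."""
    return CENTRE_ID_PATTERN.fullmatch(centre_id) is not None
```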

Data Type Templates

The Data Type field allows you to select from a list of templates available in the wis2box-webapp dataset editor. A template pre-populates the form with suggested default values appropriate for the data type, including a suggested title and keywords for the metadata and pre-configured data plugins. The topic is fixed to the default topic for the data type.

For the purpose of the training we will use the weather/surface-based-observations/synop data type which includes data plugins that ensure the data is transformed into BUFR format before being published.

If you want to publish CAP alerts using wis2box, use the template weather/advisories-warnings. This template includes a data plugin that verifies the input data is a valid CAP alert before publishing. To create CAP alerts and publish them via wis2box you can use CAP Composer.

Please choose a centre-id appropriate for your organization.

For Data Type, select weather/surface-based-observations/synop:

Click 'continue to form' to proceed. You will now be presented with the Dataset Editor Form.

Since you selected the weather/surface-based-observations/synop data type, the form will be pre-populated with some initial values related to this data type.

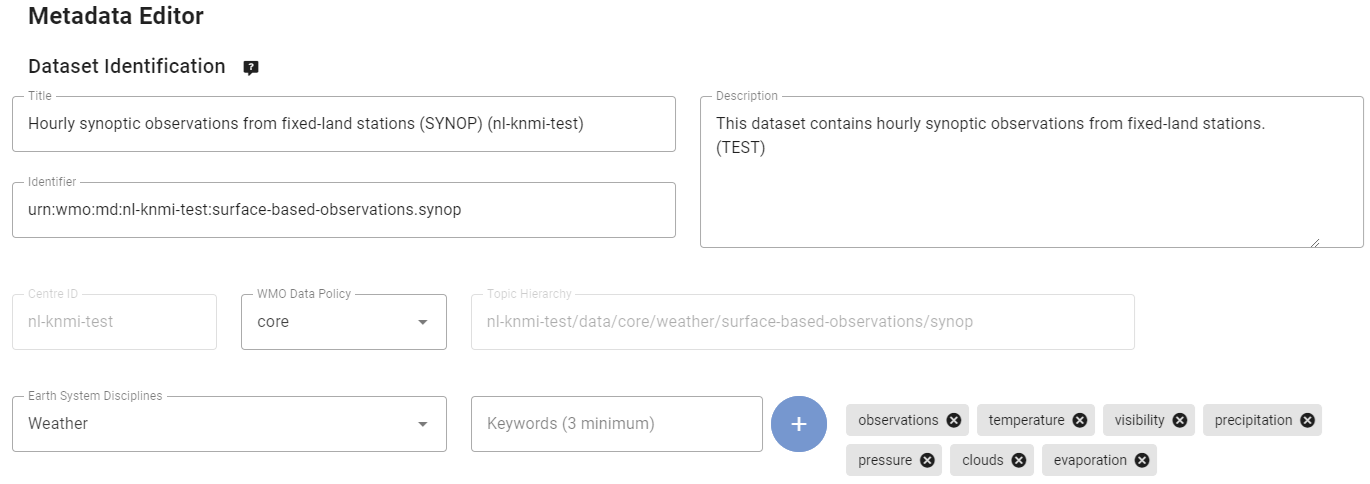

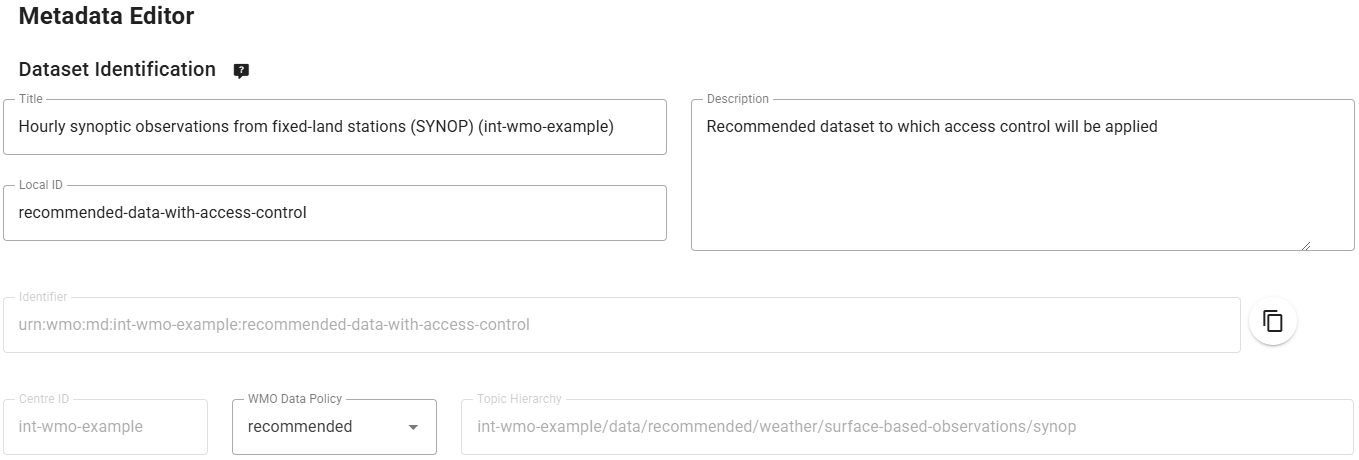

Creating discovery metadata

The Dataset Editor Form allows you to provide the Discovery Metadata for your dataset that the wis2box-management container will use to publish a WCMP2 record.

Since you have selected the 'weather/surface-based-observations/synop' data type, the form will be pre-populated with some default values.

Please make sure to replace the auto-generated 'Local ID' with a descriptive name for your dataset, e.g. 'synop-dataset-wis2training':

Review the title and keywords, update them as necessary, and provide a description for your dataset.

Note that there are options to change the 'WMO Data Policy' from 'core' to 'recommended' and to modify the default Metadata Identifier. For this training, keep the data policy as 'core' and use the default Metadata Identifier.
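The default Metadata Identifier is built from your centre-id and the Local ID. The data ingestion session later uses the identifier urn:wmo:md:not-my-centre:synop-test, so we assume the pattern is urn:wmo:md:<centre-id>:<local-id>. The helper functions below are hypothetical and only illustrate that assumption. When you need the identifier later, copy the value shown in the form.

```python
from typing import Tuple

PREFIX = "urn:wmo:md:"  # assumed prefix, taken from the example identifier


def build_metadata_id(centre_id: str, local_id: str) -> str:
    """Compose an identifier, assuming urn:wmo:md:<centre-id>:<local-id>."""
    return f"{PREFIX}{centre_id}:{local_id}"


def split_metadata_id(metadata_id: str) -> Tuple[str, str]:
    """Return (centre_id, local_id) from an identifier of the assumed form."""
    if not metadata_id.startswith(PREFIX):
        raise ValueError(f"unexpected prefix: {metadata_id!r}")
    centre_id, sep, local_id = metadata_id[len(PREFIX):].partition(":")
    if not (sep and centre_id and local_id):
        raise ValueError(f"expected <centre-id>:<local-id> after the prefix: {metadata_id!r}")
    return centre_id, local_id


print(build_metadata_id("fr-meteofrance", "synop-dataset-wis2training"))
```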

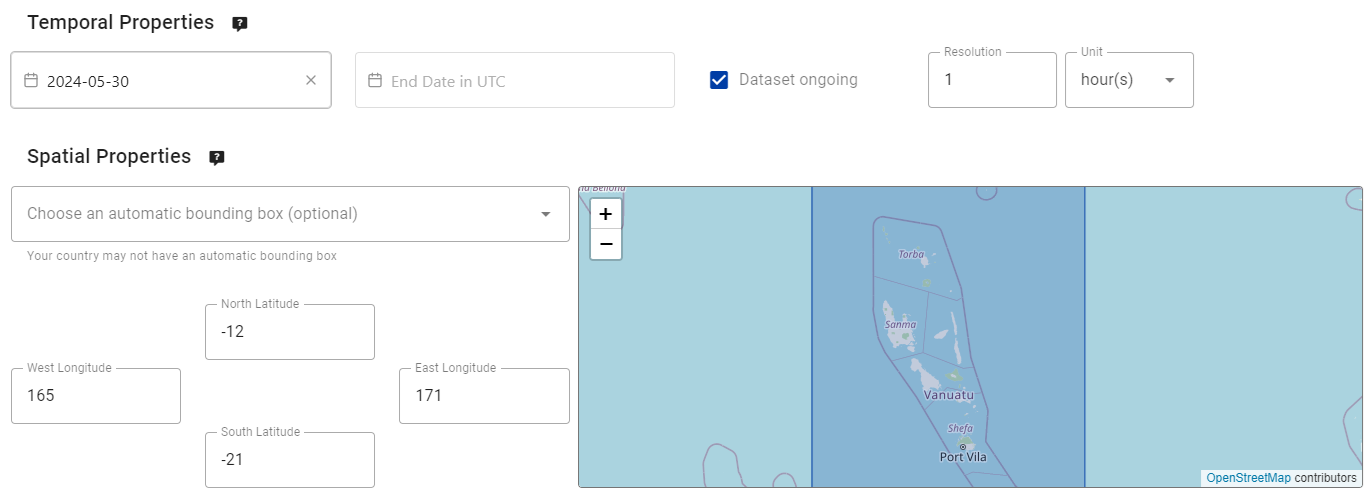

Next, review the section defining your 'Temporal Properties' and 'Spatial Properties'. You can adjust the bounding box by updating the 'North Latitude', 'South Latitude', 'East Longitude', and 'West Longitude' fields:

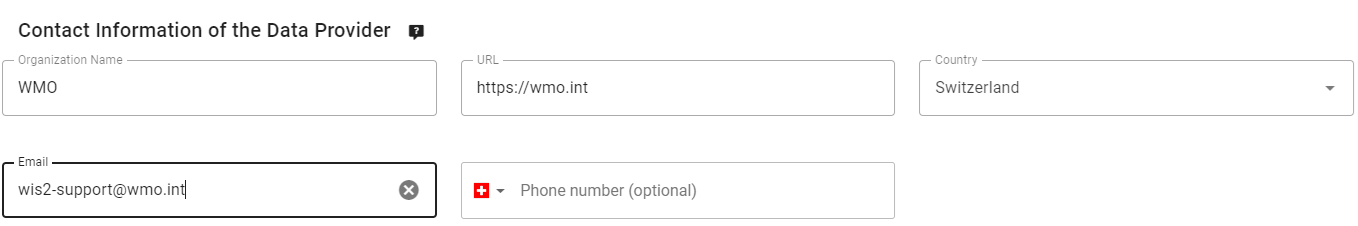

Next, fill out the section defining the 'Contact Information of the Data Provider':

Finally, fill out the section defining the 'Data Quality Information':

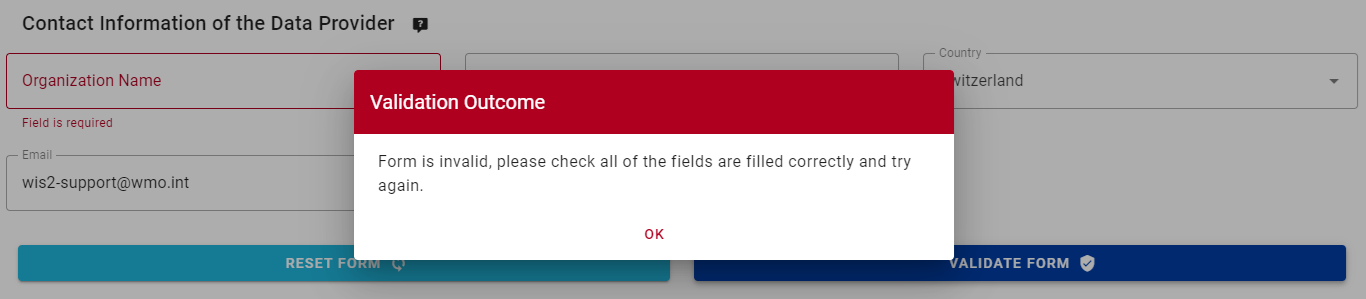

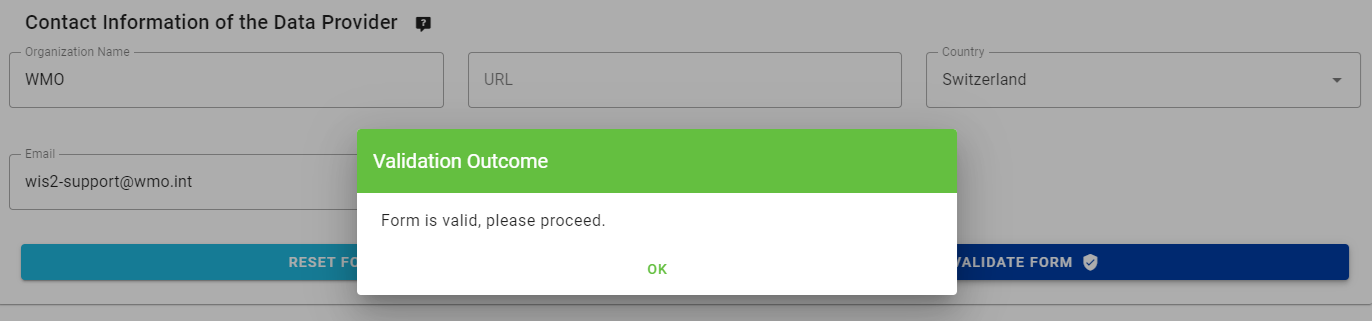

Once you are done filling out all the sections, click 'VALIDATE FORM' and check the form for any errors:

If there are any errors, correct them and click 'VALIDATE FORM' again.

Make sure there are no errors and that a pop-up indicates your form has been validated:

Next, before submitting your dataset, review the data mappings for your dataset.

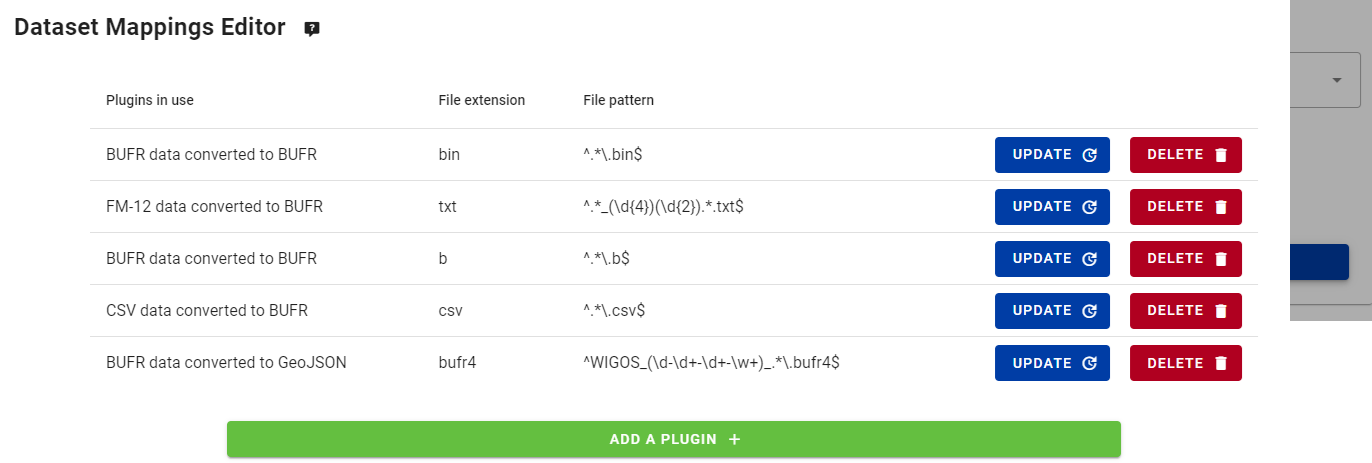

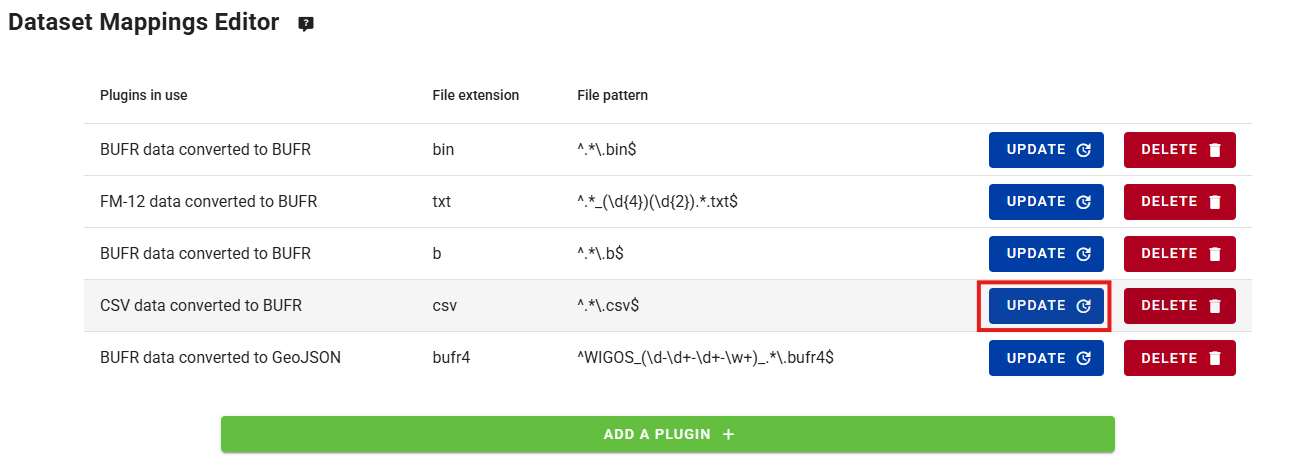

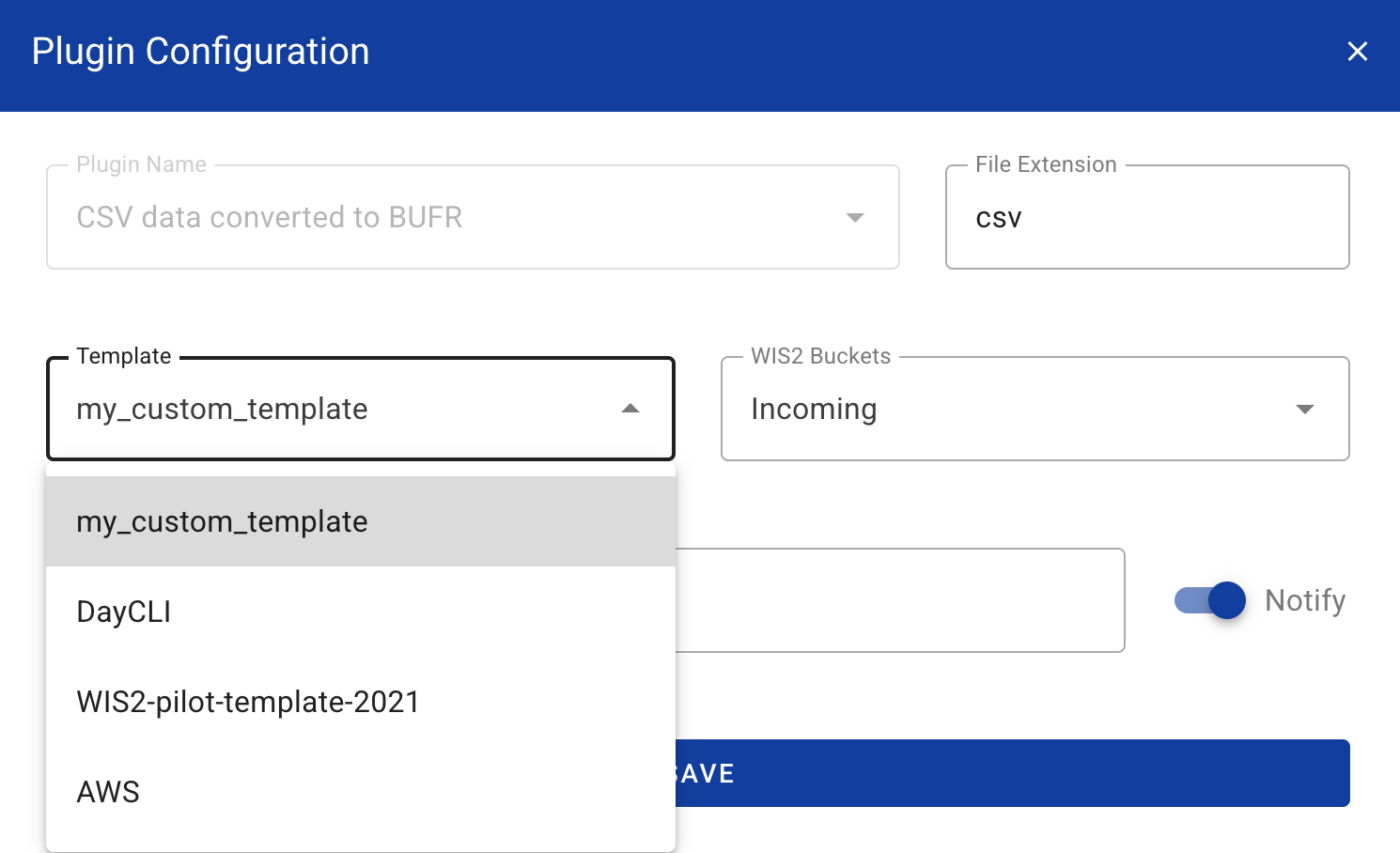

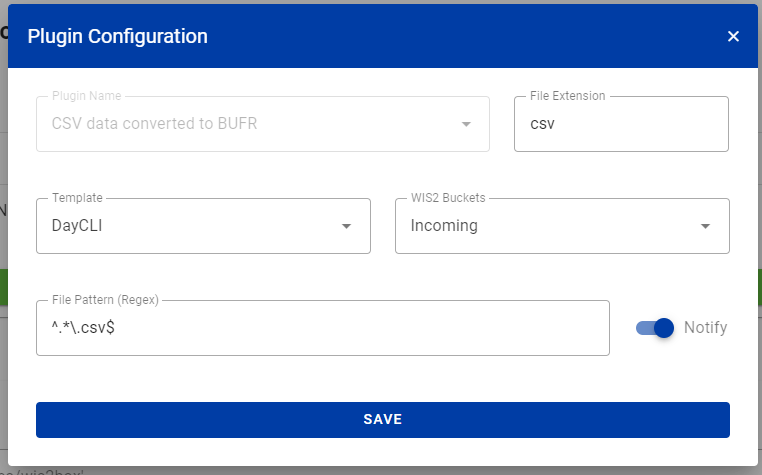

Configuring data mappings

Since you used a template to create your dataset, the data mappings have been pre-populated with the default plugins for the 'weather/surface-based-observations/synop' data type. Data plugins are used in wis2box to transform data before it is published with a WIS2 notification.

Note that you can click the 'update' button to change plugin settings such as the file extension and the file-pattern. You can leave the default settings for now. In a later session, you will learn more about BUFR and the transformation of data into BUFR format.
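The file-pattern setting is a regular expression that an incoming filename must match. The pattern below is a hypothetical example and not necessarily the default in your template, so check the value shown after clicking 'update'. It matches names such as synop_202412030900.txt and captures the year and month. Capturing these is plausible for SYNOP data, because an FM-12 SYNOP report itself carries only the day and hour of observation.

```python
import re
from typing import Optional, Tuple

# Hypothetical file-pattern for illustration: names ending in _YYYYMM...txt,
# with the year and month captured as regex groups.
FILE_PATTERN = re.compile(r"^.*_(\d{4})(\d{2}).*\.txt$")


def match_file(filename: str) -> Optional[Tuple[int, int]]:
    """Return (year, month) if the filename matches the pattern, else None."""
    match = FILE_PATTERN.match(filename)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


for name in ["synop_202412030900.txt", "synop_202412030900.csv", "report.txt"]:
    print(name, match_file(name))
```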

Submitting your dataset

Finally, you can click 'submit' to publish your dataset.

You will need to provide the authorization token for 'processes/wis2box' that you created earlier. If you have not done so, you can create a new token by following the instructions in the preparation section.

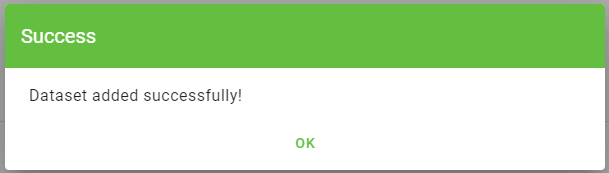

Check that you get the following message after submitting your dataset, indicating that the dataset was successfully submitted:

After you click 'OK', you are redirected to the Dataset Editor home page. If you now click on the 'Datasets' tab, you should see your new dataset listed:

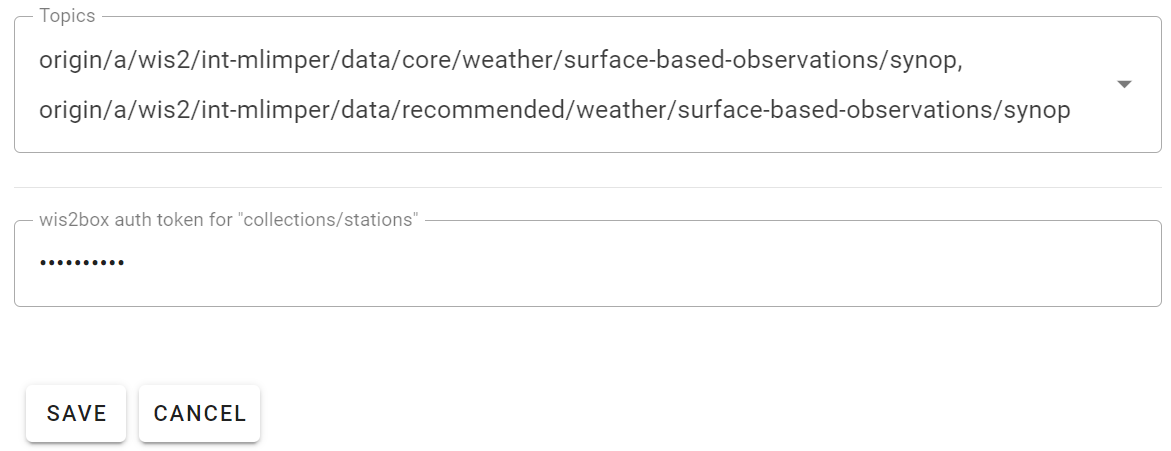

Reviewing the WIS2-notification for your discovery metadata

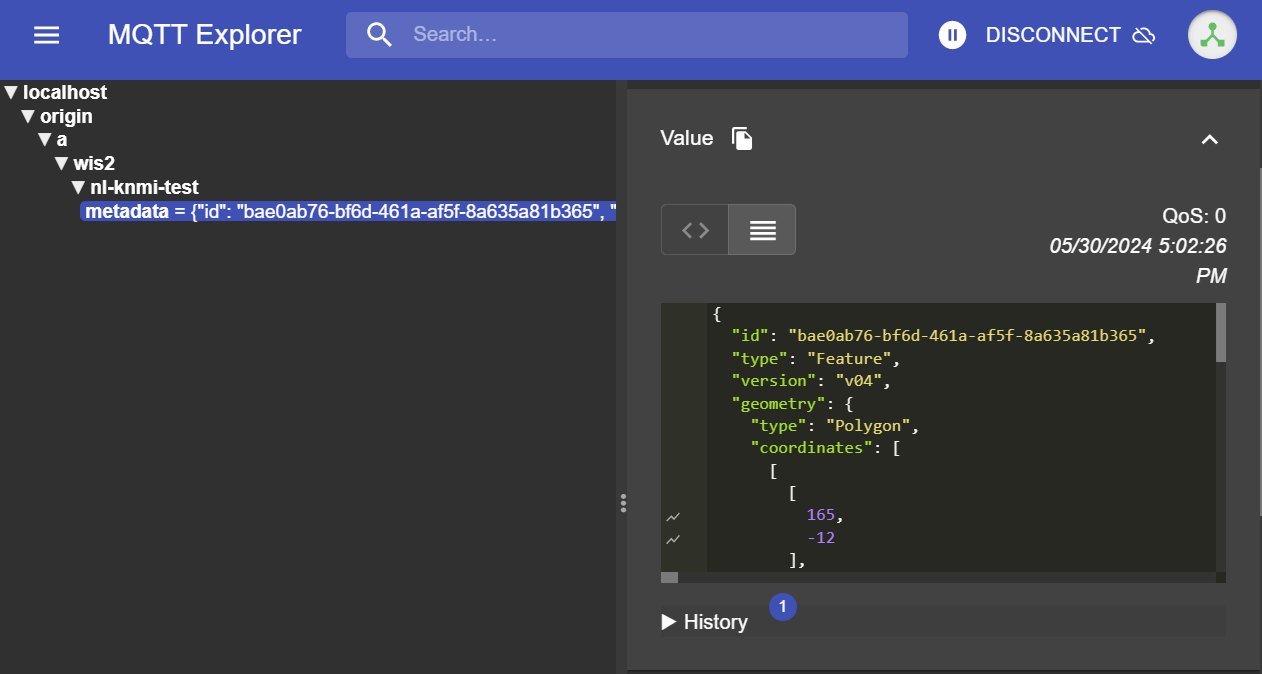

Go to MQTT Explorer. If you were connected to the broker, you should see a new WIS2 notification published on the topic origin/a/wis2/<your-centre-id>/metadata:

Inspect the content of the WIS2 notification you published. You should see a JSON document with a structure corresponding to the WIS2 Notification Message (WNM) format.

Question

On what topic is the WIS2 notification published?

Click to reveal answer

The WIS2 notification is published on the topic origin/a/wis2/<your-centre-id>/metadata.

Question

Try to find the title, description and keywords you provided in the discovery metadata in the WIS2 notification. Can you find them?

Click to reveal answer

The title, description, and keywords you provided in the discovery metadata are not present in the WIS2 notification payload!

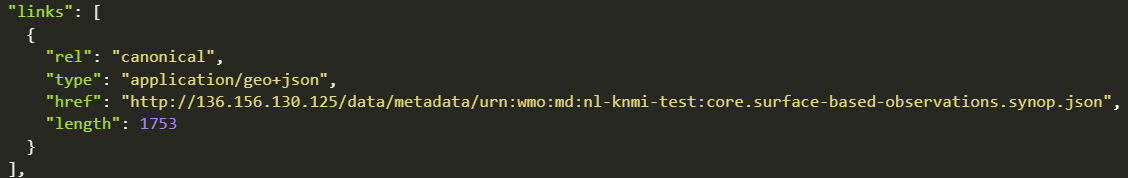

Instead, look for the canonical link in the "links" section of the WIS2 notification:

The WIS2 notification contains a canonical link to the WCMP2 record that was published.

Copy and paste this canonical link into your browser to access the WCMP2 record. Depending on your browser settings, you may be prompted to download the file, or it may be displayed directly in your browser.

You will find the title, description, and keywords you provided inside the WCMP2 record.
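To extract the canonical link from a notification in a script instead of by eye, you can scan the "links" array for entries whose "rel" is "canonical", as in the sketch below. The payload is a trimmed, made-up example with a placeholder href. A real WIS2 notification contains more fields.

```python
import json
from typing import List


def canonical_links(message: dict) -> List[str]:
    """Return the href of every link whose "rel" is "canonical"."""
    return [
        link["href"]
        for link in message.get("links", [])
        if link.get("rel") == "canonical" and "href" in link
    ]


# A trimmed, made-up payload; a real notification carries more fields.
example = json.loads("""
{
  "type": "Feature",
  "links": [
    {"rel": "canonical", "type": "application/geo+json",
     "href": "http://YOUR-HOST/path/to/record.json"}
  ]
}
""")
print(canonical_links(example))
```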

Conclusion

Congratulations!

In this practical session, you learned how to:

- create a new dataset

- define your discovery metadata

- review your data mappings

- publish discovery metadata

- review the WIS2 notification for your discovery metadata

Configuring station metadata

Learning outcomes

By the end of this practical session, you will be able to:

- create an authorization token for the collections/stations endpoint

- add station metadata to wis2box

- update/delete station metadata using the wis2box-webapp

Introduction

For sharing data internationally between WMO Members, it is important to have a common understanding of the stations that are producing the data. The WMO Integrated Global Observing System (WIGOS) provides a framework for the integration of observing systems and data management systems. The WIGOS Station Identifier (WSI) is used as the unique reference of the station which produced a specific set of observation data.

wis2box has a collection of station metadata that describes the stations producing the observation data. This metadata should be retrieved from OSCAR/Surface. The BUFR transformation tools use the station metadata in wis2box for two purposes: to check that input data contains a valid WIGOS Station Identifier (WSI), and to map each WSI to its station metadata.
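As a quick sanity check before searching OSCAR/Surface, you can split a WSI into its four hyphen-separated blocks: identifier series, issuer of identifier, issue number, and local identifier. The sketch below applies the ranges as we understand them from the WIGOS identifier structure: series 0, issuer and issue number between 0 and 65534, and a local identifier of at most 16 characters. It checks the format only and cannot tell you whether the station exists.

```python
from typing import NamedTuple


class WSI(NamedTuple):
    series: int
    issuer: int
    issue_number: int
    local_id: str


def parse_wsi(text: str) -> WSI:
    """Split a WIGOS Station Identifier into its four blocks and range-check them."""
    parts = text.strip().split("-", 3)
    if len(parts) != 4:
        raise ValueError(f"expected 4 blocks separated by '-': {text!r}")
    series, issuer, issue_number, local_id = parts
    if not (series.isdigit() and issuer.isdigit() and issue_number.isdigit()):
        raise ValueError(f"the first three blocks must be numeric: {text!r}")
    wsi = WSI(int(series), int(issuer), int(issue_number), local_id)
    if wsi.series != 0:
        raise ValueError("only identifier series 0 is handled here")
    if not (0 <= wsi.issuer <= 65534 and 0 <= wsi.issue_number <= 65534):
        raise ValueError("issuer or issue number out of range")
    if not 1 <= len(local_id) <= 16:
        raise ValueError("local identifier must have 1 to 16 characters")
    return wsi


print(parse_wsi("0-20000-0-91334"))
```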

Create an authorization token for collections/stations

To edit stations via the wis2box-webapp, you first need to create an authorization token.

Log in to your student VM and ensure you are in the wis2box directory:

cd ~/wis2box

Then log in to the wis2box-management container with the following command:

python3 wis2box-ctl.py login

Within the wis2box-management container, you can create an authorization token for a specific endpoint using the command: wis2box auth add-token --path <my-endpoint>.

For example, to use a random automatically generated token for the collections/stations endpoint:

wis2box auth add-token --path collections/stations

The output will look like this:

Continue with token: 7ca20386a131f0de384e6ffa288eb1ae385364b3694e47e3b451598c82e899d1 [y/N]? y

Token successfully created

Or, if you want to define your own token for the collections/stations endpoint, you can use the following example:

wis2box auth add-token --path collections/stations DataIsMagic

Output:

Continue with token: DataIsMagic [y/N]? y

Token successfully created

Please create an authorization token for the collections/stations endpoint using the instructions above.

Add station metadata using the wis2box-webapp

The wis2box-webapp provides a graphical user interface to edit station metadata.

Open the wis2box-webapp in your browser by navigating to http://YOUR-HOST/wis2box-webapp, and select stations:

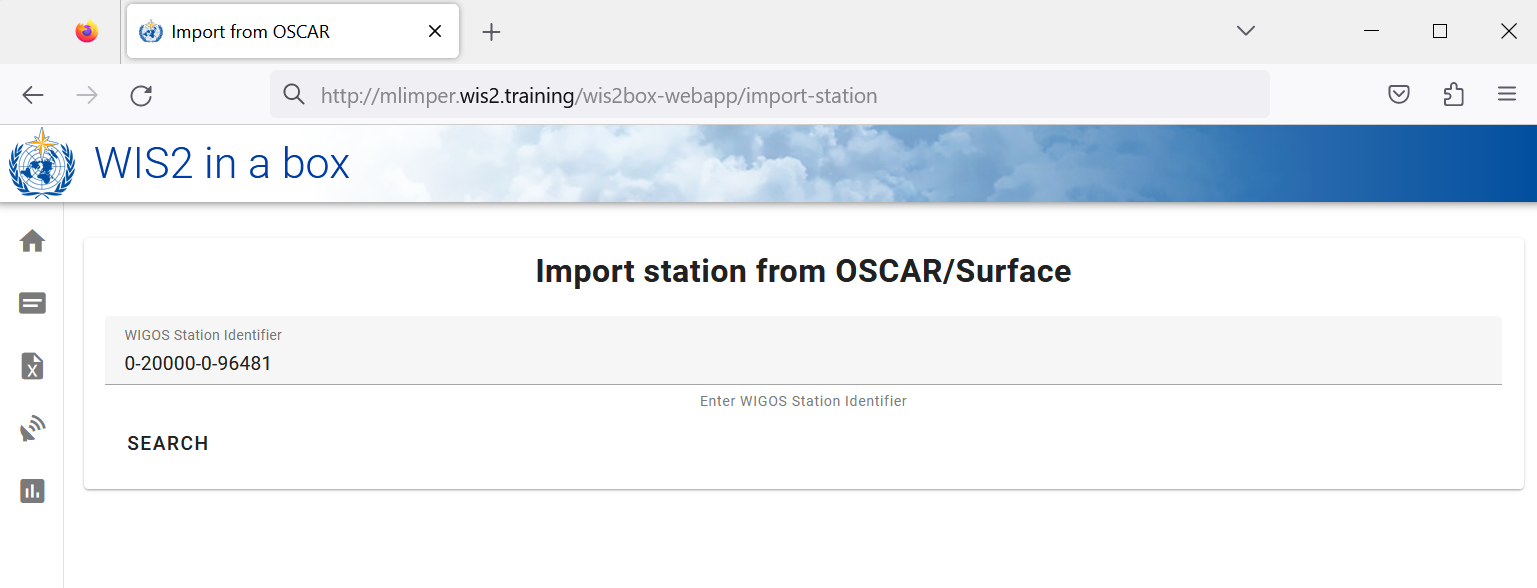

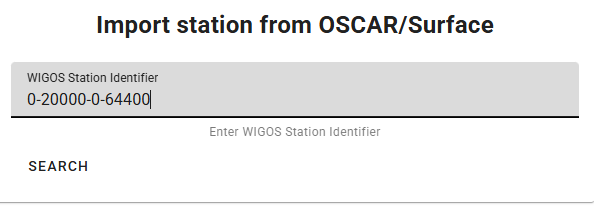

When you click 'add new station', you are asked to provide the WIGOS station identifier for the station you want to add:

Add station metadata for 3 or more stations

Please add three or more stations to the wis2box station metadata collection of your wis2box.

Please use stations from your country if possible, especially if you brought your own data.

If your country does not have any stations in OSCAR/Surface, you can use the following stations for the purpose of this exercise:

- 0-20000-0-91334

- 0-20000-0-96323 (note missing station elevation in OSCAR)

- 0-20000-0-96749 (note missing station elevation in OSCAR)

When you click 'search', the station data is retrieved from OSCAR/Surface. Note that this can take a few seconds.

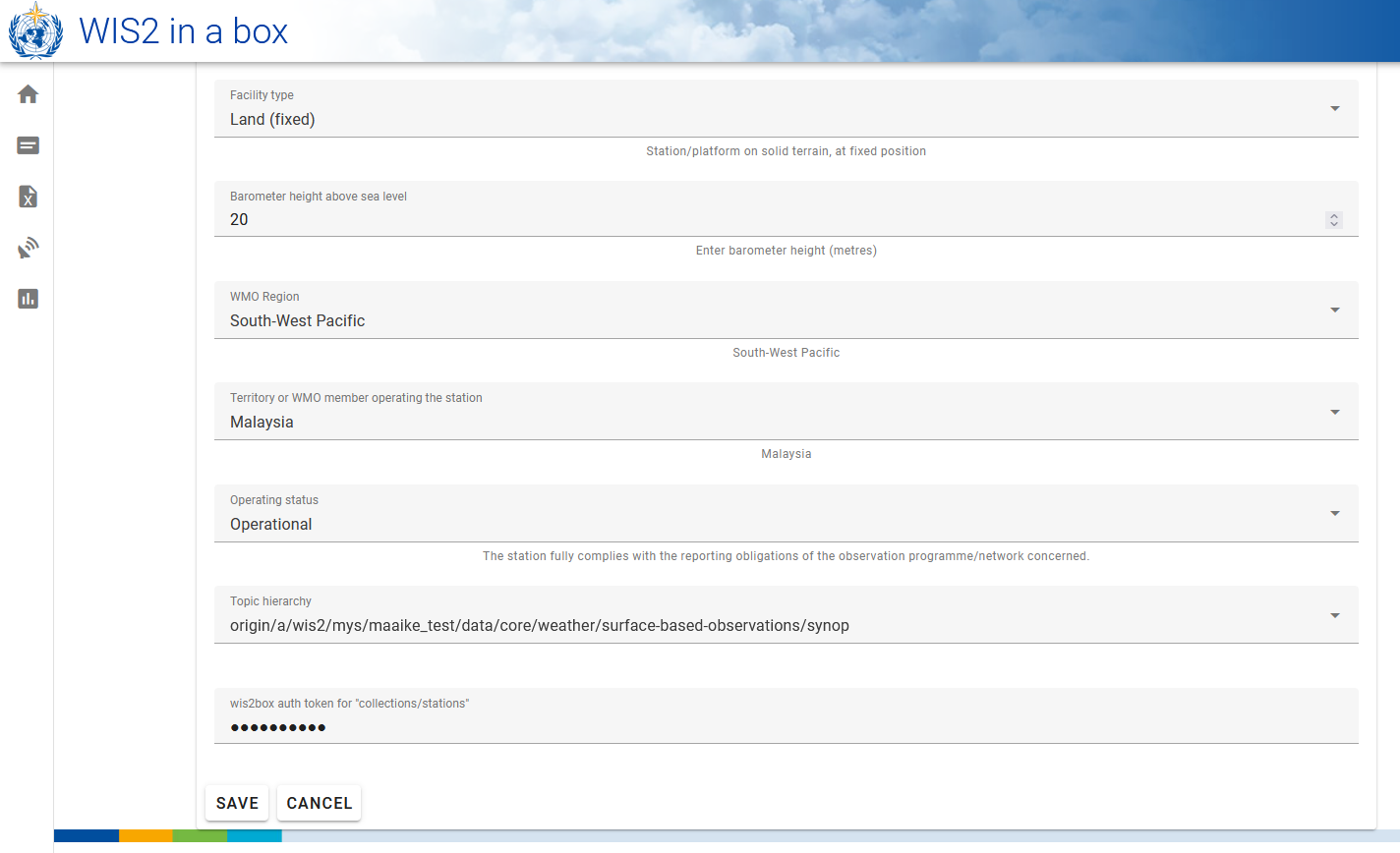

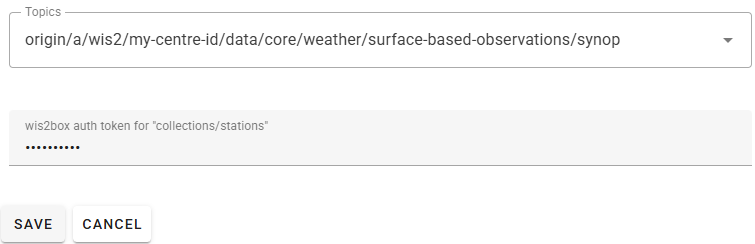

Review the data returned by OSCAR/Surface and add missing data where required. Select a topic for the station and provide your authorization token for the collections/stations endpoint and click 'save':

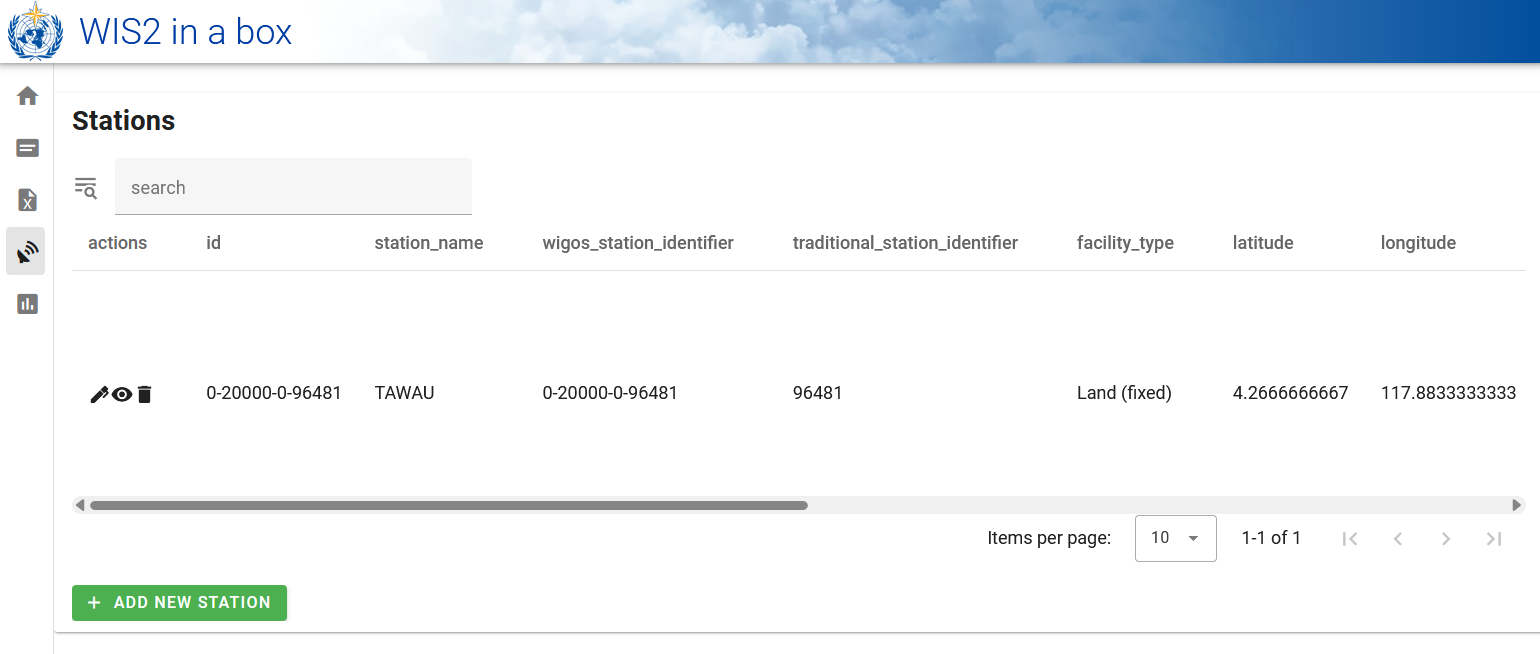

Go back to the station list and you will see the station you added:

Repeat this process until you have at least 3 stations configured.

Deriving missing elevation information

If your station elevation is missing, online services can help you look up the elevation using open elevation data. One such example is the Open Topo Data API.

For example, to get the elevation at latitude -6.15558 and longitude 106.84204, you can copy and paste the following URL into a new browser tab:

https://api.opentopodata.org/v1/aster30m?locations=-6.15558,106.84204

Output:

{
  "results": [
    {
      "dataset": "aster30m",
      "elevation": 7.0,
      "location": {
        "lat": -6.15558,
        "lng": 106.84204
      }
    }
  ],
  "status": "OK"
}
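You can also script this lookup, for example when several stations are missing their elevation. Below is a minimal sketch using only the Python standard library. It assumes the response shape shown above, and `fetch_elevation` needs internet access from wherever you run it. The elevation may be null when the dataset has no data for the point.

```python
import json
from typing import Optional
from urllib.request import urlopen

API_BASE = "https://api.opentopodata.org/v1"


def elevation_url(lat: float, lon: float, dataset: str = "aster30m") -> str:
    """Build a query URL of the same form as the example above."""
    return f"{API_BASE}/{dataset}?locations={lat},{lon}"


def parse_elevation(body: str) -> Optional[float]:
    """Extract the first elevation (in metres) from the API response text."""
    data = json.loads(body)
    if data.get("status") != "OK" or not data.get("results"):
        raise ValueError(f"unexpected response: {body[:200]}")
    return data["results"][0]["elevation"]  # may be None (null) if no data


def fetch_elevation(lat: float, lon: float, dataset: str = "aster30m") -> Optional[float]:
    """Query the public API (network access required)."""
    with urlopen(elevation_url(lat, lon, dataset), timeout=10) as response:
        return parse_elevation(response.read().decode("utf-8"))
```

For example, `fetch_elevation(-6.15558, 106.84204)` repeats the browser query above.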

Review your station metadata

The station metadata is stored in the backend of wis2box and made available via the wis2box-api.

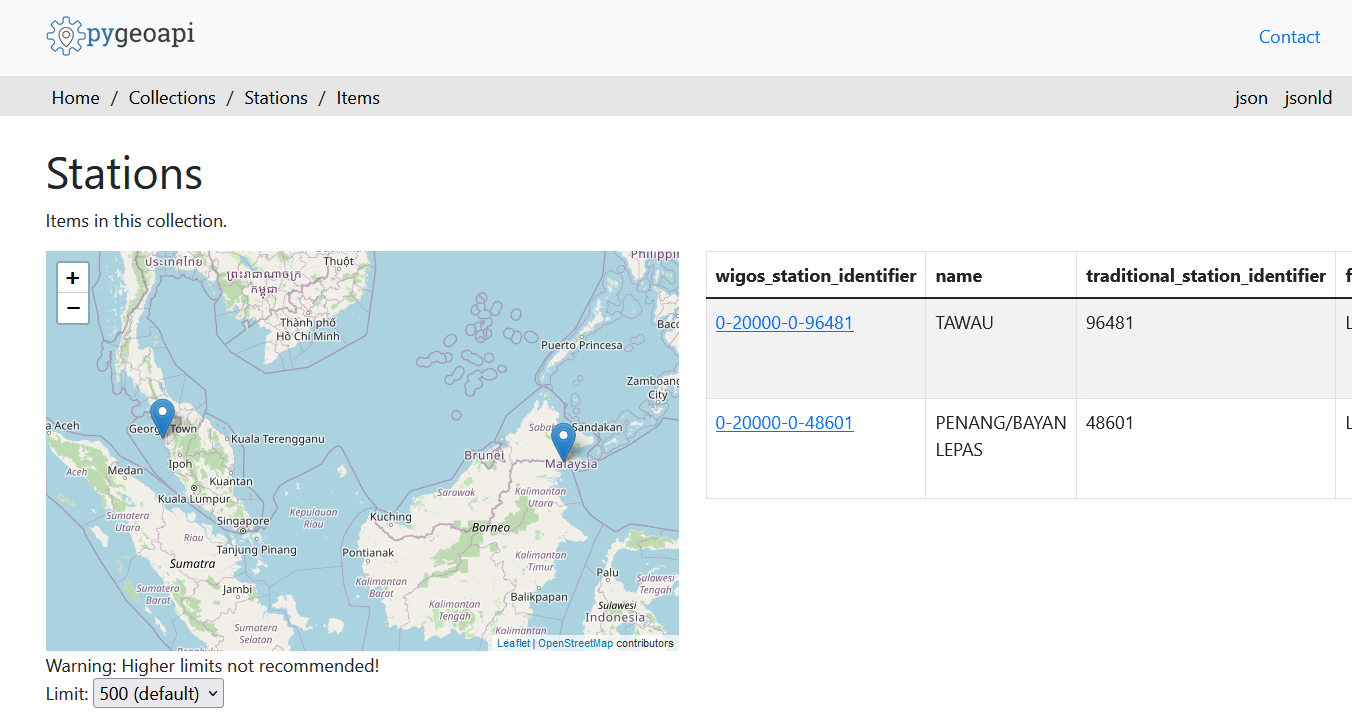

If you open a browser and navigate to http://YOUR-HOST/oapi/collections/stations/items you will see the station metadata you added:
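You can also read the same endpoint from a script. The sketch below rests on three assumptions. First, it requests JSON with the f=json query parameter, which many OGC API - Features servers support and which we assume the wis2box-api accepts. Second, it reads only the first page of results. Third, it assumes each feature's "id" holds the station's WSI.

```python
import json
from typing import List
from urllib.request import urlopen


def station_ids(feature_collection: dict) -> List[str]:
    """Return the "id" of each feature in an OGC API - Features response."""
    return [str(f["id"]) for f in feature_collection.get("features", []) if "id" in f]


def fetch_stations(host: str) -> dict:
    # f=json requests JSON instead of the HTML page a browser receives
    # (assumed to be supported; only the first page of items is read).
    url = f"http://{host}/oapi/collections/stations/items?f=json"
    with urlopen(url, timeout=10) as response:
        return json.load(response)
```

For example, `print(station_ids(fetch_stations("YOUR-HOST")))` lists the stations you added.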

Review your station metadata

Verify that the stations you added are associated with your dataset by visiting http://YOUR-HOST/oapi/collections/stations/items in your browser.

You also have the option to view/update/delete the station in the wis2box-webapp. Note that you are required to provide your authorization token for the collections/stations endpoint to update/delete the station.

Update/delete station metadata

Try to update or delete the station metadata for one of the stations you added using the wis2box-webapp.

Bulk station metadata upload

Note that wis2box also has the ability to perform "bulk" loading of station metadata from a CSV file using the command line in the wis2box-management container.

python3 wis2box-ctl.py login

wis2box metadata station publish-collection -p /data/wis2box/metadata/station/station_list.csv -th origin/a/wis2/centre-id/weather/surface-based-observations/synop

This allows you to upload a large number of stations at once and associate them with a specific topic.

You can create the CSV file using Excel or a text editor. Then copy it into the directory defined as WIS2BOX_HOST_DATADIR in your wis2box.env file, which makes it available inside the wis2box-management container under the /data/wis2box/ directory.
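If you generate the CSV file from a script instead of Excel, Python's csv module can write it. The column names below are an assumption based on the example station_list.csv shipped with wis2box, so compare them with the official documentation before a real upload. In this sketch, unknown keys raise an error and missing columns are left empty.

```python
import csv
import io
from typing import Dict, Iterable, TextIO

# Assumed column order, based on the example station_list.csv shipped with
# wis2box; check the official documentation before relying on it.
FIELDS = [
    "station_name", "wigos_station_identifier", "traditional_station_identifier",
    "facility_type", "latitude", "longitude", "elevation",
    "barometer_height", "territory_name", "wmo_region",
]


def write_station_csv(stations: Iterable[Dict[str, object]], fh: TextIO) -> None:
    """Write station dictionaries as CSV; columns not given are left empty."""
    writer = csv.DictWriter(fh, fieldnames=FIELDS, restval="")
    writer.writeheader()
    for station in stations:
        writer.writerow(station)  # a key not in FIELDS raises ValueError


buffer = io.StringIO()
write_station_csv([{"station_name": "EXAMPLE", "wigos_station_identifier": "0-20000-0-64400"}], buffer)
print(buffer.getvalue())
```

To write a real file, open it with `open(path, "w", newline="", encoding="utf-8")`. For example, if WIS2BOX_HOST_DATADIR is ~/wis2box-data, a file written to ~/wis2box-data/metadata/station/station_list.csv on the host appears as /data/wis2box/metadata/station/station_list.csv in the container.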

After doing a bulk upload of stations, it is recommended to review the stations in the wis2box-webapp to ensure the data was uploaded correctly.

See the official wis2box documentation for more information on how to use this feature.

Conclusion

Congratulations!

In this practical session, you learned how to:

- create an authorization token for the collections/stations endpoint to be used with the wis2box-webapp

- add station metadata to wis2box using the wis2box-webapp

- view/update/delete station metadata using the wis2box-webapp

Ingesting data for publication

Learning Outcomes

By the end of this practical session, you will be able to:

- Trigger the wis2box workflow by uploading data to MinIO using the command line, the MinIO web interface, SFTP, or a Python script.

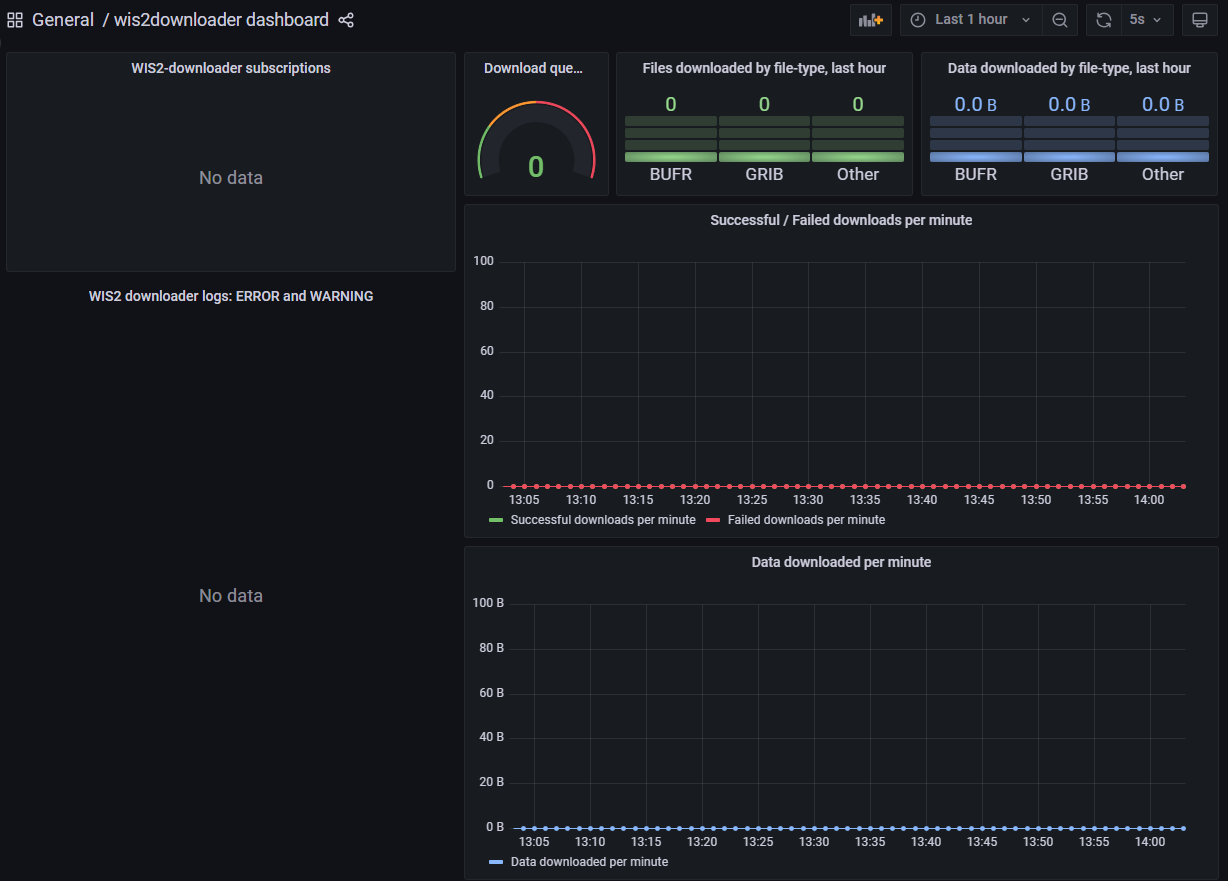

- Access the Grafana dashboard to monitor the status of data ingestion and view logs of your wis2box instance.

- View WIS2 data notifications published by your wis2box using MQTT Explorer.

Introduction

In WIS2, data is shared in real-time using WIS2 data notifications that contain a "canonical" link from which the data can be downloaded.

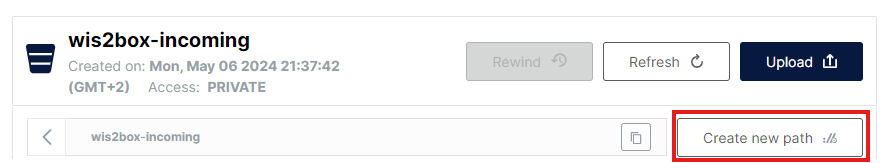

To trigger the data workflow in a WIS2 Node using the wis2box software, data must be uploaded to the wis2box-incoming bucket in MinIO, which initiates the wis2box workflow. This process results in the data being published via a WIS2 data notification. Depending on the data mappings configured in your wis2box instance, the data may be transformed into BUFR format before being published.

In this exercise, we will use sample data files to trigger the wis2box workflow and publish WIS2 data notifications for the dataset you configured in the previous practical session.

During the exercise, we will monitor the status of the data ingestion using the Grafana dashboard and MQTT Explorer. The Grafana dashboard uses data from Prometheus and Loki to display the status of your wis2box, while MQTT Explorer allows you to see the WIS2 data notifications published by your wis2box instance.

Note that wis2box will transform the example data into BUFR format before publishing it to the MQTT broker, as per the data mappings pre-configured in your dataset. For this exercise, we will focus on the different methods to upload data to your wis2box instance and verify successful ingestion and publication. Data transformation will be covered later in the Data Conversion Tools practical session.

Preparation

This section uses the dataset for "surface-based-observations/synop" previously created in the Configuring Datasets in wis2box practical session. It also requires knowledge of configuring stations in the wis2box-webapp, as described in the Configuring Station Metadata practical session.

Ensure you can log in to your student VM using your SSH client (e.g., PuTTY).

Ensure wis2box is up and running:

cd ~/wis2box/

python3 wis2box-ctl.py start

python3 wis2box-ctl.py status

Ensure MQTT Explorer is running and connected to your instance using the public credentials everyone/everyone with a subscription to the topic origin/a/wis2/#.
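The subscription origin/a/wis2/# relies on MQTT topic wildcards. "+" matches exactly one topic level. "#", used as the last level, matches the parent level and everything below it. The small pure-Python matcher below makes those rules concrete. It was written for this training and is not part of wis2box or of any MQTT client library.

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a subscription filter.

    '+' matches exactly one level; '#' (allowed only as the last level)
    matches the parent level and any number of levels below it.
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == "#":
            return i == len(sub_levels) - 1  # '#' elsewhere is an invalid filter
        if i >= len(topic_levels):
            return False  # the topic is shorter than the filter
        if level != "+" and level != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)


for t in ["origin/a/wis2/fr-meteofrance/metadata", "cache/a/wis2/fr-meteofrance/metadata"]:
    print(t, topic_matches("origin/a/wis2/#", t))
```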

Ensure you have a web browser open with the Grafana dashboard for your instance by navigating to http://YOUR-HOST:3000.

Prepare Example Data

Copy the directory exercise-materials/data-ingest-exercises to the directory you defined as the WIS2BOX_HOST_DATADIR in your wis2box.env file:

cp -r ~/exercise-materials/data-ingest-exercises ~/wis2box-data/

Note

The WIS2BOX_HOST_DATADIR is mounted as /data/wis2box/ inside the wis2box-management container by the docker-compose.yml file included in the wis2box directory.

This allows you to share data between the host and the container.

Add the Test Station

Add the station with WIGOS identifier 0-20000-0-64400 to your wis2box instance using the station editor in the wis2box-webapp.

Retrieve the station from OSCAR:

Add the station to the datasets you created for publishing on "../surface-based-observations/synop" and save the changes using your authorization token:

Note that you can remove this station from your dataset after the practical session.

Testing Data Ingestion from the Command Line

In this exercise, we will use the wis2box data ingest command to upload data to MinIO.

Ensure you are in the wis2box directory and log in to the wis2box-management container:

cd ~/wis2box

python3 wis2box-ctl.py login

Verify the following sample data is available in the directory /data/wis2box/ within the wis2box-management container:

ls -lh /data/wis2box/data-ingest-exercises/synop_202412030900.txt

Ingesting Data Using wis2box data ingest

Execute the following command to ingest the sample data file into your wis2box instance:

wis2box data ingest -p /data/wis2box/data-ingest-exercises/synop_202412030900.txt --metadata-id urn:wmo:md:not-my-centre:synop-test

Was the data successfully ingested? If not, what was the error message, and how can you fix it?

Click to Reveal Answer

The data was not successfully ingested. You should see the following:

Error: metadata_id=urn:wmo:md:not-my-centre:synop-test not found in data mappings

The error message indicates that the metadata identifier you provided does not match any of the datasets you have configured in your wis2box instance.

Provide the correct metadata ID that matches the dataset you created in the previous practical session and repeat the data ingestion command until you see the following output:

Processing /data/wis2box/data-ingest-exercises/synop_202412030900.txt

Done

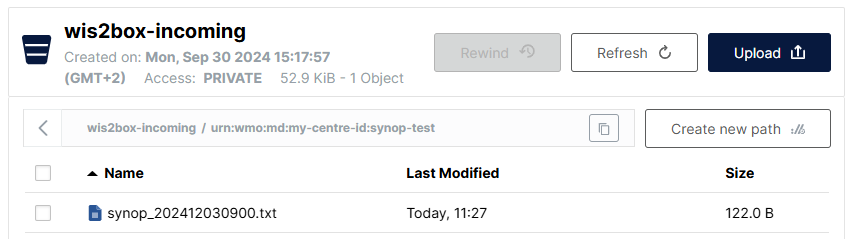

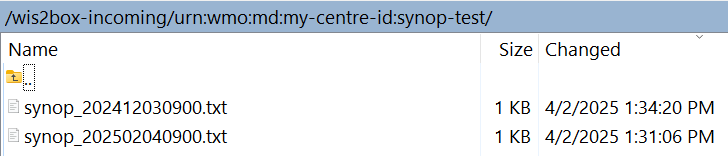

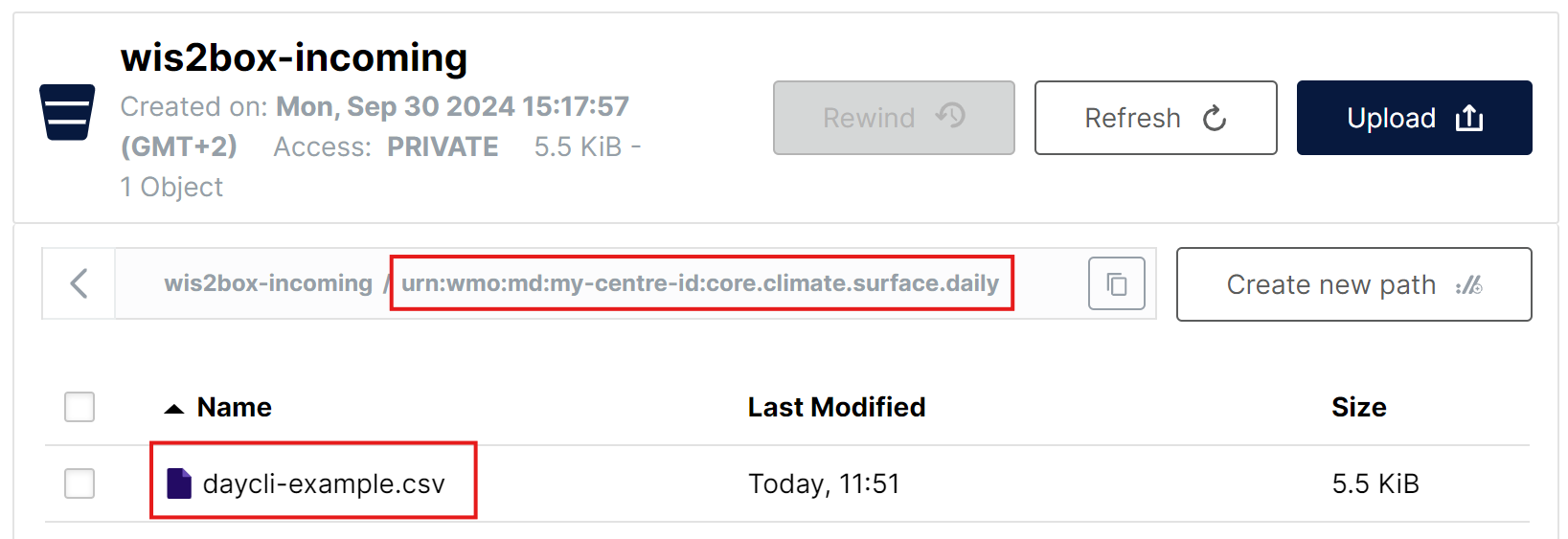

Go to the MinIO console in your browser and check whether the file synop_202412030900.txt was uploaded to the wis2box-incoming bucket. You should see a new directory named after the metadata identifier you provided in the --metadata-id option. Inside this directory, you will find the file synop_202412030900.txt:

Note

The wis2box data ingest command uploaded the file to the wis2box-incoming bucket in MinIO in a directory named after the metadata identifier you provided.

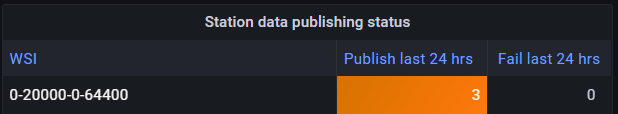

Go to the Grafana dashboard in your browser and check the status of the data ingestion.

Check the Status of the Data Ingestion on Grafana

Go to the Grafana dashboard at http://YOUR-HOST:3000 in your browser and check the status of the data ingestion.

How can you see if the data was successfully ingested and published?

Click to Reveal Answer

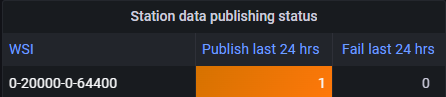

If you successfully ingested the data, you should see the following:

If you do not see this, please check for WARNING or ERROR messages displayed at the bottom of the dashboard and attempt to resolve them.

Check the MQTT Broker for WIS2 Notifications

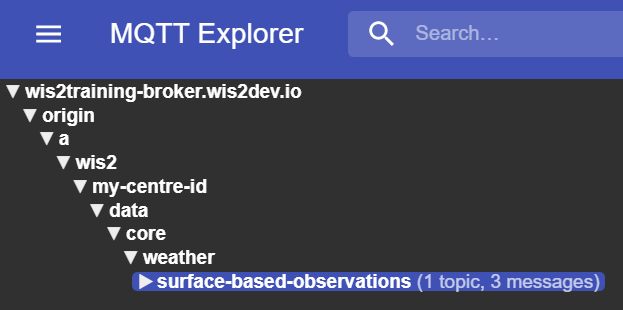

Go to the MQTT Explorer and check if you can see the WIS2 notification message for the data you just ingested.

How many WIS2 data notifications were published by your wis2box?

How do you access the content of the data being published?

Click to Reveal Answer

You should see 1 WIS2 data notification published by your wis2box.

To access the content of the data being published, you can expand the topic structure to see the different levels of the message until you reach the last level and review the message content.

The message content has a "links" section with a "rel" key of "canonical" and a "href" key with the URL to download the data. The URL will be in the format http://YOUR-HOST/data/....

Note that the data format is BUFR, and you will need a BUFR parser to view the content of the data. The BUFR format is a binary format used by meteorological services to exchange data. The data plugins inside wis2box transformed the data into BUFR before publishing it.
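You do not need a full BUFR parser to confirm that a download really is BUFR. In BUFR edition 4, every message begins with an 8-octet Section 0: the characters 'BUFR', a 3-octet total length, and the edition number. Every message ends with '7777'. The sketch below checks only those framing bytes of the first message and does not decode any data. `check_canonical_link` assumes the canonical URL is reachable from where you run it.

```python
from typing import Tuple
from urllib.request import urlopen


def bufr_section0(data: bytes) -> Tuple[int, int]:
    """Return (total_length, edition) read from Section 0 of a BUFR message.

    Section 0: 'BUFR' (octets 1-4), total length (octets 5-7), edition (octet 8).
    A complete message ends with the characters '7777'.
    """
    if len(data) < 8 or data[:4] != b"BUFR":
        raise ValueError("data does not start with 'BUFR'")
    total_length = int.from_bytes(data[4:7], "big")
    edition = data[7]
    if total_length > len(data) or data[total_length - 4:total_length] != b"7777":
        raise ValueError("message is truncated or lacks the '7777' end marker")
    return total_length, edition


def check_canonical_link(url: str) -> Tuple[int, int]:
    """Download the file behind a canonical link and check its first message."""
    with urlopen(url, timeout=10) as response:
        return bufr_section0(response.read())
```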

After completing this exercise, exit the wis2box-management container:

exit

Uploading Data Using the MinIO Web Interface

In the previous exercises, you uploaded data available on the wis2box host to MinIO using the wis2box data ingest command.

Next, we will use the MinIO web interface, which allows you to download and upload data to MinIO using a web browser.

Re-upload Data Using the MinIO Web Interface

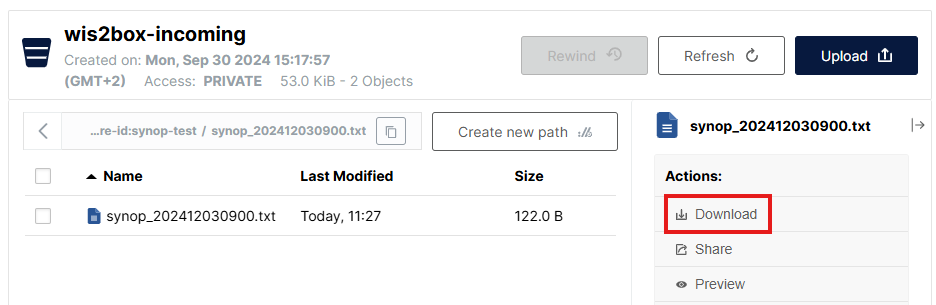

Go to the MinIO web interface in your browser and browse to the wis2box-incoming bucket. You will see the file synop_202412030900.txt you uploaded in the previous exercises.

Click on the file, and you will have the option to download it:

You can download this file and re-upload it to the same path in MinIO to re-trigger the wis2box workflow.

Check the Grafana dashboard and MQTT Explorer to see if the data was successfully ingested and published.

Click to Reveal Answer

You will see a message indicating that the wis2box already published this data:

ERROR - Data already published for WIGOS_0-20000-0-64400_20241203T090000-bufr4; not publishing

This demonstrates that the data workflow was triggered, but the data was not re-published. The wis2box will not publish the same data twice.

Upload New Data Using the MinIO Web Interface

Download this sample file synop_202502040900.txt (right-click and select "save as" to download the file).

Upload the file you downloaded using the web interface to the same path in MinIO as the previous file.

Did the data ingest and publish successfully?

Click to Reveal Answer

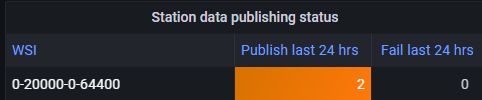

Go to the Grafana dashboard and check if the data was successfully ingested and published.

If you use the wrong path, you will see an error message in the logs.

If you use the correct path, you will see one more WIS2 data notification published for test station 0-20000-0-64400, indicating that the data was successfully ingested and published.

Uploading Data Using SFTP

The MinIO service in wis2box can also be accessed over SFTP. The SFTP server for MinIO is bound to port 8022 on the host (port 22 is used for SSH).

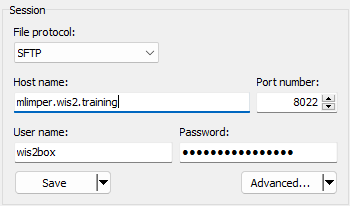

In this exercise, we will demonstrate how to use WinSCP to upload data to MinIO using SFTP.

You can set up a new WinSCP connection as shown in this screenshot:

The credentials for the SFTP connection are defined by WIS2BOX_STORAGE_USERNAME and WIS2BOX_STORAGE_PASSWORD in your wis2box.env file and are the same as the credentials you used to connect to the MinIO UI.

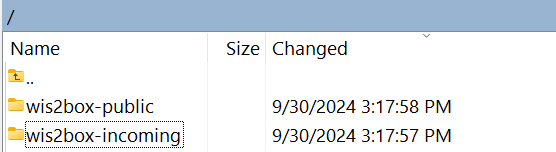

When you log in, you will see the buckets used by wis2box in MinIO:

You can navigate to the wis2box-incoming bucket and then to the folder for your dataset. You will see the files you uploaded in the previous exercises:

Upload Data Using SFTP

Download this sample file to your local computer:

synop_202503030900.txt (right-click and select "save as" to download the file).

Then upload it to the incoming dataset path in MinIO using your SFTP session in WinSCP.

Check the Grafana dashboard and MQTT Explorer to see if the data was successfully ingested and published.

Click to Reveal Answer

You should see a new WIS2 data notification published for the test station 0-20000-0-64400, indicating that the data was successfully ingested and published.

If you use the wrong path, you will see an error message in the logs.
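You can also script the SFTP upload. The sketch below uses the third-party paramiko library, which we chose for illustration and which is not part of wis2box. As the WinSCP view suggests, it assumes that MinIO's SFTP service exposes each bucket as a top-level directory. The remote path is therefore the bucket, then a folder named after the metadata identifier, then the filename. The sketch does not verify the server's host key, which you should add outside a training setting.

```python
import os
import posixpath


def incoming_path(metadata_id: str, filename: str, bucket: str = "wis2box-incoming") -> str:
    """Remote path: bucket, then a folder named after the metadata identifier."""
    return posixpath.join("/", bucket, metadata_id, filename)


def sftp_upload(host: str, username: str, password: str, local_file: str,
                metadata_id: str, port: int = 8022) -> str:
    """Upload one file over MinIO's SFTP service and return the remote path."""
    import paramiko  # third-party package: pip3 install paramiko

    remote = incoming_path(metadata_id, os.path.basename(local_file))
    transport = paramiko.Transport((host, port))
    try:
        # Credentials: WIS2BOX_STORAGE_USERNAME / WIS2BOX_STORAGE_PASSWORD.
        transport.connect(username=username, password=password)
        sftp = paramiko.SFTPClient.from_transport(transport)
        try:
            sftp.put(local_file, remote)
        finally:
            sftp.close()
    finally:
        transport.close()
    return remote
```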

Uploading Data Using a Python Script

In this exercise, we will use the MinIO Python client to copy data into MinIO.

MinIO provides a Python client, which can be installed as follows:

pip3 install minio

On your student VM, the 'minio' package for Python will already be installed.

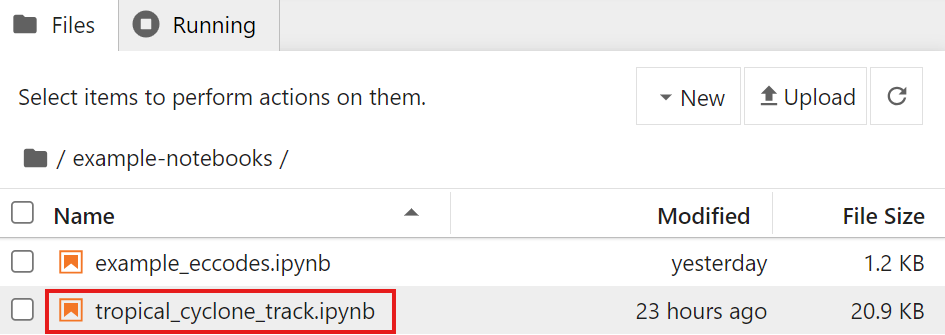

In the exercise-materials/data-ingest-exercises directory, you will find an example script copy_file_to_incoming.py that can be used to copy files into MinIO.

Try to run the script to copy the sample data file synop_202501030900.txt into the wis2box-incoming bucket in MinIO as follows:

cd ~/wis2box-data/data-ingest-exercises

python3 copy_file_to_incoming.py synop_202501030900.txt

Note

You will get an error as the script is not configured to access the MinIO endpoint on your wis2box yet.

The script needs to know the correct endpoint for accessing MinIO on your wis2box. If wis2box is running on your host, the MinIO endpoint is available at http://YOUR-HOST:9000. The script also needs to be updated with your storage password and the path in the MinIO bucket to store the data.

Update the Script and Ingest the Sample Data

Edit the script copy_file_to_incoming.py to address the errors, using one of the following methods:

- From the command line: use the nano or vim text editor to edit the script.

- Using WinSCP: start a new connection using File Protocol SCP and the same credentials as your SSH client. Navigate into the directory wis2box-data/data-ingest-exercises and edit copy_file_to_incoming.py using the built-in text editor.

Ensure that you:

- Define the correct MinIO endpoint for your host.

- Provide the correct storage password for your MinIO instance.

- Provide the correct path in the MinIO bucket to store the data.
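For reference, here is a minimal sketch of an upload with the MinIO Python client. It is not the contents of copy_file_to_incoming.py. It illustrates one common stumbling block: `minio.Minio` expects the endpoint as host:port without the http:// scheme, and `secure` selects HTTP or HTTPS. The object name is a folder named after the metadata identifier, followed by the filename, which is the layout described for the wis2box data ingest command. The access and secret keys correspond to WIS2BOX_STORAGE_USERNAME and WIS2BOX_STORAGE_PASSWORD.

```python
import os
from typing import Tuple
from urllib.parse import urlparse


def minio_endpoint(url: str) -> Tuple[str, bool]:
    """Turn 'http://YOUR-HOST:9000' into ('YOUR-HOST:9000', False).

    minio.Minio takes the endpoint without a scheme; `secure` selects HTTPS.
    """
    parsed = urlparse(url)
    if not parsed.netloc:
        raise ValueError(f"expected a URL such as http://YOUR-HOST:9000, got {url!r}")
    return parsed.netloc, parsed.scheme == "https"


def upload_to_incoming(url: str, access_key: str, secret_key: str,
                       local_file: str, metadata_id: str,
                       bucket: str = "wis2box-incoming") -> str:
    """Copy one file to <bucket>/<metadata_id>/<filename> and return the object name."""
    from minio import Minio  # already installed on the student VM

    endpoint, secure = minio_endpoint(url)
    client = Minio(endpoint=endpoint, access_key=access_key,
                   secret_key=secret_key, secure=secure)
    object_name = f"{metadata_id}/{os.path.basename(local_file)}"
    client.fput_object(bucket_name=bucket, object_name=object_name, file_path=local_file)
    return object_name
```

For example, call `upload_to_incoming` with http://YOUR-HOST:9000, your storage username and password, the local file, and your dataset's metadata identifier.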

Re-run the script to ingest the sample data file synop_202501030900.txt into MinIO:

python3 copy_file_to_incoming.py synop_202501030900.txt

Ensure the errors are resolved.

Once you manage to run the script successfully, you will see a message indicating that the file was copied to MinIO, and you should see data notifications published by your wis2box instance in MQTT Explorer.

You can also check the Grafana dashboard to see if the data was successfully ingested and published.

Now that the script is working, you can try to copy other files into MinIO using the same script.

Ingesting Binary Data in BUFR Format

Run the following command to copy the binary data file bufr-example.bin into the wis2box-incoming bucket in MinIO:

python3 copy_file_to_incoming.py bufr-example.bin

Check the Grafana dashboard and MQTT Explorer to see if the test data was successfully ingested and published. If you see any errors, try to resolve them.

Verify the Data Ingest

How many messages were published to the MQTT broker for this data sample?

Click to Reveal Answer

You will see errors reported in Grafana as the stations in the BUFR file are not defined in the station list of your wis2box instance.

If all stations used in the BUFR file are defined in your wis2box instance, you should see 10 messages published to the MQTT broker. Each notification corresponds to data for one station for one observation timestamp.

The plugin wis2box.data.bufr4.ObservationDataBUFR splits the BUFR file into individual BUFR messages and publishes one message for each station and observation timestamp.
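To see how many BUFR messages a file such as bufr-example.bin contains, you can walk through it using the total length stored in each message's Section 0 (octets 5-7 in BUFR edition 4). The sketch below is a byte-level illustration only. wis2box itself relies on ecCodes. Note that the sketch counts messages, not subsets. A single message can hold several subsets (see numberOfSubsets in the bufr_ls output in the Data Conversion Tools session), so the number of notifications can differ from the number of messages.

```python
from typing import List


def split_bufr(data: bytes) -> List[bytes]:
    """Split concatenated BUFR messages using each Section 0 total length.

    Bytes between messages (e.g. transmission headers) are skipped by
    searching for the next 'BUFR' marker.
    """
    messages: List[bytes] = []
    pos = data.find(b"BUFR")
    while pos != -1 and pos + 8 <= len(data):
        length = int.from_bytes(data[pos + 4:pos + 7], "big")  # octets 5-7
        end = pos + length
        if length < 12 or end > len(data) or data[end - 4:end] != b"7777":
            raise ValueError(f"malformed or truncated BUFR message at offset {pos}")
        messages.append(data[pos:end])
        pos = data.find(b"BUFR", end)
    return messages
```

For example, `len(split_bufr(open("bufr-example.bin", "rb").read()))` gives the number of messages in the sample file.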

Conclusion

Congratulations!

In this practical session, you learned how to:

- Trigger the wis2box workflow by uploading data to MinIO using various methods.

- Debug common errors in the data ingestion process using the Grafana dashboard and the logs of your wis2box instance.

- Monitor WIS2 data notifications published by your wis2box in the Grafana dashboard and MQTT Explorer.

Data Conversion Tools

Learning Outcomes

By the end of this practical session, you will be able to:

- Access ecCodes command-line tools within the wis2box-api container

- Use the synop2bufr tool to convert FM-12 SYNOP reports to BUFR from the command line

- Trigger synop2bufr conversion via the wis2box-webapp

- Use the csv2bufr tool to convert CSV data to BUFR from the command line

Introduction

Data published on WIS2 should meet the requirements and standards defined by the various Earth system discipline / domain expert communities. To lower the barrier to data publication for land-based surface observations, wis2box provides tools to convert data to BUFR format. These tools are available via the wis2box-api container and can be used from the command line to test the data conversion process.

The main conversions currently supported by wis2box are FM-12 SYNOP reports to BUFR and CSV data to BUFR. FM-12 data is supported as it is still widely used and exchanged in the WMO community, while CSV data is supported to allow the mapping of data produced by automatic weather stations to BUFR format.

About FM-12 SYNOP

Surface weather reports from land surface stations have historically been reported hourly or at the main (00, 06, 12, and 18 UTC) and intermediate (03, 09, 15, 21 UTC) synoptic hours. Prior to the migration to BUFR, these reports were encoded in the plain text FM-12 SYNOP code form. While the migration to BUFR was scheduled to be complete by 2012, a large number of reports are still exchanged in the legacy FM-12 SYNOP format. Further information on the FM-12 SYNOP format can be found in the WMO Manual on Codes, Volume I.1 (WMO-No. 306, Volume I.1).

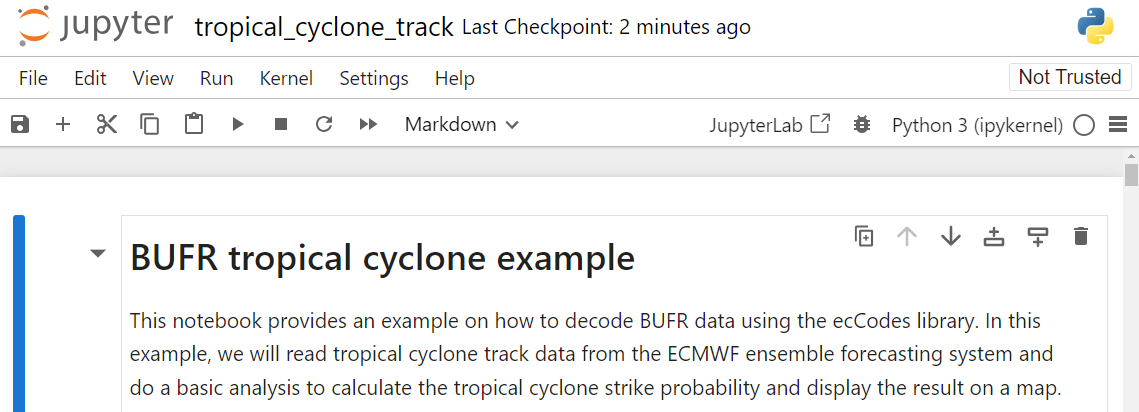

About ecCodes

The ecCodes library is a set of software libraries and utilities designed to decode and encode meteorological data in the GRIB and BUFR formats. It is developed by the European Centre for Medium-Range Weather Forecasts (ECMWF), see the ecCodes documentation for more information.

The wis2box software includes the ecCodes library in the base image of the wis2box-api container. This allows users to access the command-line tools and libraries from within the container. The ecCodes library is used within the wis2box-stack to decode and encode BUFR messages.

About csv2bufr and synop2bufr

In addition to ecCodes, the wis2box uses the following Python modules that work with ecCodes to convert data to BUFR format:

- synop2bufr: to support the legacy FM-12 SYNOP format traditionally used by manual observers. The synop2bufr module relies on additional station metadata to encode additional parameters in the BUFR file. See the synop2bufr repository on GitHub.

- csv2bufr: to enable the conversion of CSV extracts produced by automated weather stations to BUFR format. The csv2bufr module converts CSV data to BUFR using a mapping template that defines how the CSV data should be mapped to the BUFR format. See the csv2bufr repository on GitHub.

These modules can be used standalone or as part of the wis2box stack.
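The sketch below makes the idea of a mapping template concrete. It maps a few CSV columns to ecCodes BUFR keys and converts units on the way, since BUFR stores air temperature in kelvin and pressure in pascals. The mapping dictionary is hypothetical and much simpler than a real csv2bufr mapping template, which is a JSON file with its own schema. The sample values are made up.

```python
import csv
import io
from typing import Callable, Dict, Optional, Tuple

# Hypothetical mapping: CSV column -> (ecCodes BUFR key, converter).
MAPPING: Dict[str, Tuple[str, Callable[[str], float]]] = {
    "air_temperature_c": ("airTemperature", lambda v: float(v) + 273.15),  # degC -> K
    "station_pressure_hpa": ("pressure", lambda v: float(v) * 100.0),      # hPa -> Pa
    "relative_humidity": ("relativeHumidity", float),                      # percent
}


def map_row(row: Dict[str, str]) -> Dict[str, Optional[float]]:
    """Apply MAPPING to one CSV row; empty or absent cells become None (missing)."""
    result: Dict[str, Optional[float]] = {}
    for column, (key, convert) in MAPPING.items():
        value = (row.get(column) or "").strip()
        result[key] = convert(value) if value else None
    return result


# Made-up sample row with an empty humidity cell.
sample = io.StringIO("air_temperature_c,station_pressure_hpa,relative_humidity\n25.0,1008.2,\n")
for row in csv.DictReader(sample):
    print(map_row(row))
```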

Preparation

Prerequisites

- Ensure that your wis2box has been configured and started

- Ensure you have set up a dataset and configured at least one station in your wis2box

- Connect to the MQTT broker of your wis2box instance using MQTT Explorer

- Open the wis2box web application (http://YOUR-HOST/wis2box-webapp) and ensure you are logged in

- Open the Grafana dashboard for your instance by going to http://YOUR-HOST:3000

To use the BUFR command-line tools, you will need to be logged in to the wis2box-api container. Unless specified otherwise, all commands should be run in this container. You will also need to have MQTT Explorer open and connected to your broker.

First, connect to your student VM via your SSH client and copy the exercise materials to the wis2box-api container:

docker cp ~/exercise-materials/data-conversion-exercises wis2box-api:/root

Then log in to the wis2box-api container and change to the directory where the exercise materials are located:

cd ~/wis2box

python3 wis2box-ctl.py login wis2box-api

cd /root/data-conversion-exercises

Confirm that the tools are available, starting with ecCodes:

bufr_dump -V

You should get the following response:

ecCodes Version 2.36.0

Next, check the synop2bufr version:

synop2bufr --version

You should get the following response:

synop2bufr, version 0.7.0

Next, check csv2bufr:

csv2bufr --version

You should get the following response:

csv2bufr, version 0.8.5

ecCodes command-line tools

The ecCodes library included in the wis2box-api container provides a number of command-line tools for working with BUFR files.

The next exercises demonstrate how to use bufr_ls and bufr_dump to check the content of a BUFR file.

bufr_ls

In this first exercise, you will use the bufr_ls command to inspect the headers of a BUFR file and determine the type of the contents of the file.

Use the following command to run bufr_ls on the file bufr-cli-ex1.bufr4:

bufr_ls bufr-cli-ex1.bufr4

You should see the following output:

bufr-cli-ex1.bufr4

centre masterTablesVersionNumber localTablesVersionNumber typicalDate typicalTime numberOfSubsets

cnmc 29 0 20231002 000000 1

1 of 1 messages in bufr-cli-ex1.bufr4

1 of 1 total messages in 1 file

Various options can be passed to bufr_ls to change both the format and header fields printed.

Question

What would be the command to list the previous output in JSON format?

You can run the command bufr_ls with the -h flag to see the available options.

Click to reveal answer

You can change the output format to JSON using the -j flag:

bufr_ls -j bufr-cli-ex1.bufr4

When run, this should give you the following output:

{ "messages" : [
  {
    "centre": "cnmc",
    "masterTablesVersionNumber": 29,
    "localTablesVersionNumber": 0,
    "typicalDate": 20231002,
    "typicalTime": "000000",
    "numberOfSubsets": 1
  }
]}

The output printed represents the values of some of the header keys in the BUFR file.

On its own, this output is not very informative: it tells us little about what the file actually contains.

When examining a BUFR file, we often want to determine the type of data it contains and the typical date and time of that data. These headers can be listed using the -p flag, which selects the header keys to print; multiple keys can be given as a comma-separated list.

You can use the following command to list the data category, sub-category, typical date, and time:

bufr_ls -p dataCategory,internationalDataSubCategory,typicalDate,typicalTime -j bufr-cli-ex1.bufr4

Question

Execute the previous command and interpret the output using Common Code Table C-13 to determine the data category and sub-category.

What type of data (data category and sub-category) is contained in the file? What is the typical date and time for the data?

Click to reveal answer

{ "messages" : [
  {
    "dataCategory": 2,
    "internationalDataSubCategory": 4,
    "typicalDate": 20231002,
    "typicalTime": "000000"
  }
]}

From this, we see that:

- The data category is 2, indicating "Vertical soundings (other than satellite)" data.

- The international sub-category is 4, indicating "Upper-level temperature/humidity/wind reports from fixed-land stations (TEMP)" data.

- The typical date and time are 2023-10-02 and 00:00:00Z (UTC), respectively.
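If you want the typical date and time as a single timestamp, the two header values can be combined. A minimal sketch, assuming a POSIX shell with awk; typical_to_iso is a hypothetical helper name, and reading the time as UTC follows the answer above:

```shell
# Combine typicalDate (YYYYMMDD) and typicalTime (HHMMSS) into an ISO 8601 UTC timestamp
typical_to_iso() {
  awk -v d="$1" -v t="$2" 'BEGIN {
    t = sprintf("%06d", t)    # left-pad the time to 6 digits (e.g. "0" -> "000000")
    printf "%s-%s-%sT%s:%s:%sZ\n", substr(d, 1, 4), substr(d, 5, 2), substr(d, 7, 2),
           substr(t, 1, 2), substr(t, 3, 2), substr(t, 5, 2)
  }'
}

typical_to_iso 20231002 000000   # prints 2023-10-02T00:00:00Z
```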

bufr_dump

The bufr_dump command can be used to list and examine the contents of a BUFR file, including the data itself.

Now run the bufr_dump command on the second example file, bufr-cli-ex2.bufr4:

bufr_dump bufr-cli-ex2.bufr4

This produces JSON output that can be hard to read. Try using the -p flag to print the data as plain text (key=value format):

bufr_dump -p bufr-cli-ex2.bufr4

You should see a large number of keys in the output, many of which have the value MISSING. This is typical of real-world data, as not all ecCodes keys are populated with reported values.

You can use the grep command to filter the output so that only the keys that are not missing are shown:

bufr_dump -p bufr-cli-ex2.bufr4 | grep -v MISSING

Question

What is the pressure reduced to mean sea level reported in the BUFR file bufr-cli-ex2.bufr4?

Click to reveal answer

Using the following command:

bufr_dump -p bufr-cli-ex2.bufr4 | grep -i 'pressureReducedToMeanSeaLevel'

You should see the following output:

pressureReducedToMeanSeaLevel=105590
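The reported value is in pascals (Pa), the SI unit ecCodes uses for pressure, so 105590 Pa corresponds to 1055.9 hPa. The sketch below, assuming a POSIX shell with awk, extracts a single key's value from the key=value lines printed by bufr_dump -p and converts it to hPa. The helper names bufr_value and pa_to_hpa are hypothetical, and the sample lines are copied from the output above so the example runs without the BUFR file:

```shell
# Print the value of one key from "key=value" lines on stdin (exact key match)
bufr_value() {
  awk -F'=' -v key="$1" '$1 == key { sub(/^[^=]*=/, ""); print }'
}

# Convert a pressure in pascals to hectopascals, one decimal place
pa_to_hpa() {
  awk -v p="$1" 'BEGIN { printf "%.1f\n", p / 100 }'
}

# Sample lines taken from the bufr_dump output of bufr-cli-ex2.bufr4
sample='wigosIssueNumber=0
pressureReducedToMeanSeaLevel=105590'

msl=$(printf '%s\n' "$sample" | bufr_value pressureReducedToMeanSeaLevel)
echo "MSL pressure: $msl Pa = $(pa_to_hpa "$msl") hPa"   # prints MSL pressure: 105590 Pa = 1055.9 hPa
```

Inside the container you could pipe the real output instead, e.g. bufr_dump -p bufr-cli-ex2.bufr4 | bufr_value pressureReducedToMeanSeaLevel. Matching the key name exactly, rather than with grep -i, avoids picking up other keys that merely contain the search text.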

Question

What is the WIGOS station identifier of the station that reported the data in the BUFR file bufr-cli-ex2.bufr4?

Click to reveal answer

Using the following command:

bufr_dump -p bufr-cli-ex2.bufr4 | grep -i 'wigos'

You should see the following output:

wigosIdentifierSeries=0

wigosIssuerOfIdentifier=20000

wigosIssueNumber=0

wigosLocalIdentifierCharacter="99100"

This indicates that the WIGOS station identifier is 0-20000-0-99100.
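The four wigos* keys map onto the four hyphen-separated parts of the identifier (series, issuer, issue number, local identifier). A minimal sketch that assembles them, assuming a POSIX shell with awk and a BUFR message with a single subset; wigos_id is a hypothetical helper name:

```shell
# Build "series-issuer-issueNumber-localId" from the wigos* keys printed by bufr_dump -p.
# If several subsets are present, the last value seen for each key wins.
wigos_id() {
  awk -F'=' '
    { gsub(/"/, "", $2) }                     # drop quotes around character values
    $1 == "wigosIdentifierSeries"         { s = $2 }
    $1 == "wigosIssuerOfIdentifier"       { i = $2 }
    $1 == "wigosIssueNumber"              { n = $2 }
    $1 == "wigosLocalIdentifierCharacter" { l = $2 }
    END { print s "-" i "-" n "-" l }'
}

# Sample lines copied from the output above
dump='wigosIdentifierSeries=0
wigosIssuerOfIdentifier=20000
wigosIssueNumber=0
wigosLocalIdentifierCharacter="99100"'

printf '%s\n' "$dump" | wigos_id   # prints 0-20000-0-99100
```

With the real file: bufr_dump -p bufr-cli-ex2.bufr4 | wigos_id.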

synop2bufr conversion

Next, let's look at how to convert FM-12 SYNOP data to BUFR format using the synop2bufr module. The module is installed in the wis2box-api container and can be used from the command line as follows:

synop2bufr data transform \
    --metadata <station-metadata.csv> \
    --output-dir <output-directory-path> \
    --year <year-of-observation> \
    --month <month-of-observation> \
    <input-fm12.txt>

The --metadata argument specifies the station metadata file, which provides additional information to be encoded in the BUFR file.

The --output-dir argument is used to specify the directory where the converted BUFR files will be written. The --year and --month arguments are used to specify the year and month of the observation.

The synop2bufr module is also used in the wis2box-webapp to convert FM-12 SYNOP data to BUFR format using a web-based input form.

The next few exercises demonstrate how the synop2bufr module works and how to use it to convert FM-12 SYNOP data to BUFR format.

review the example SYNOP message

Inspect synop_message.txt, the example SYNOP message file for this exercise:

cd /root/data-conversion-exercises

more synop_message.txt

Question

How many SYNOP reports are in this file?

Click to reveal answer

The output shows the following:

AAXX 21121

15015 02999 02501 10103 21090 39765 42952 57020 60001=

15020 02997 23104 10130 21075 30177 40377 58020 60001 81041=

15090 02997 53102 10139 21075 30271 40364 58031 60001 82046=

There are 3 SYNOP reports in the file, corresponding to 3 different stations (identified by the 5-digit traditional station identifiers: 15015, 15020, and 15090).

Note that the end of each report is marked by the = character.
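Because each report ends with =, the reports can also be counted and their station identifiers listed programmatically. A sketch, assuming a POSIX shell with awk and that each report begins with its 5-digit IIiii group, as in this example; synop_stations is a hypothetical helper name:

```shell
# List the IIiii station identifier of each "="-terminated FM-12 SYNOP report on stdin
synop_stations() {
  awk 'BEGIN { RS = "=" }
  {
    sub(/AAXX[^0-9]*[0-9]+/, "")          # drop the "AAXX YYGGiw" section 0 groups
    if (match($0, /[0-9][0-9][0-9][0-9][0-9]/))
      print substr($0, RSTART, RLENGTH)   # first 5-digit group of the report
  }'
}

synop_sample='AAXX 21121
15015 02999 02501 10103 21090 39765 42952 57020 60001=
15020 02997 23104 10130 21075 30177 40377 58020 60001 81041=
15090 02997 53102 10139 21075 30271 40364 58031 60001 82046='

printf '%s\n' "$synop_sample" | synop_stations           # 15015, 15020, 15090 (one per line)
printf '%s\n' "$synop_sample" | synop_stations | wc -l   # 3 reports
```

For the exercise file: synop_stations < synop_message.txt.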

review the station list

The --metadata argument requires a CSV file in a predefined format. A working example is provided in the file station_list.csv.

Use the following command to inspect the contents of the station_list.csv file:

more station_list.csv

Question

How many stations are listed in the station list? What are the WIGOS station identifiers of the stations?

Click to reveal answer

The output shows the following:

station_name,wigos_station_identifier,traditional_station_identifier,facility_type,latitude,longitude,elevation,barometer_height,territory_name,wmo_region

OCNA SUGATAG,0-20000-0-15015,15015,landFixed,47.7770616258,23.9404602638,503.0,504.0,ROU,europe

BOTOSANI,0-20000-0-15020,15020,landFixed,47.7356532437,26.6455501701,161.0,162.1,ROU,europe

The file contains station metadata for 2 stations, with the WIGOS station identifiers 0-20000-0-15015 and 0-20000-0-15020.
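To pull a single column out of the station list, for example to compare identifiers against other files, a small awk helper is enough. A sketch, assuming a POSIX shell with awk and a plain CSV without quoted fields that contain commas; station_column is a hypothetical helper name:

```shell
# Print one column of a simple CSV, selected by its header name (file argument or stdin)
station_column() {
  awk -F',' -v name="$1" '
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == name) col = i; next }
    col     { print $col }' ${2:+"$2"}
}

# First three columns of station_list.csv, as shown above
stations='station_name,wigos_station_identifier,traditional_station_identifier
OCNA SUGATAG,0-20000-0-15015,15015
BOTOSANI,0-20000-0-15020,15020'

printf '%s\n' "$stations" | station_column wigos_station_identifier
```

For the exercise file: station_column wigos_station_identifier station_list.csv.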

convert SYNOP to BUFR

Next, use the following command to convert the FM-12 SYNOP message to BUFR format:

synop2bufr data transform --metadata station_list.csv --output-dir ./ --year 2024 --month 09 synop_message.txt

Question

How many BUFR files were created? What does the WARNING message in the output mean?

Click to reveal answer

The output shows the following:

[WARNING] Station 15090 not found in station file

If you check the contents of your directory with ls -lh, you should see that 2 new BUFR files were created: WIGOS_0-20000-0-15015_20240921T120000.bufr4 and WIGOS_0-20000-0-15020_20240921T120000.bufr4.

The warning message indicates that the station with the traditional station identifier 15090 was not found in the station list file station_list.csv. This means that the SYNOP report for this station was not converted to BUFR format.
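You can reproduce this check before running the conversion by comparing the station identifiers in the SYNOP file against the traditional_station_identifier column of the station list. The sketch below is not how synop2bufr implements the check; it assumes a POSIX shell with awk, that each report starts with its IIiii group, and that the CSV has no quoted commas. missing_stations is a hypothetical helper name:

```shell
# Print SYNOP station identifiers that have no matching row in the station list
# Usage: missing_stations SYNOP_FILE STATION_CSV
missing_stations() {
  awk 'BEGIN { RS = "=" }
       {
         sub(/AAXX[^0-9]*[0-9]+/, "")        # drop the "AAXX YYGGiw" section 0 groups
         if (match($0, /[0-9][0-9][0-9][0-9][0-9]/))
           print substr($0, RSTART, RLENGTH) # first 5-digit group = IIiii
       }' "$1" |
  awk -F',' '
    # First input (the CSV): remember every traditional_station_identifier
    FNR == NR {
      if (FNR == 1) {
        for (i = 1; i <= NF; i++) if ($i == "traditional_station_identifier") c = i
      } else if (c) {
        known[$c] = 1
      }
      next
    }
    # Second input (stdin): identifiers found in the SYNOP reports
    !($1 in known) { print $1 }' "$2" -
}
```

From /root/data-conversion-exercises, missing_stations synop_message.txt station_list.csv should print 15090 for the exercise files.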

Question

Check the content of the BUFR file WIGOS_0-20000-0-15015_20240921T120000.bufr4 using the bufr_dump command.

Can you verify that the information provided in the station_list.csv file is present in the BUFR file?

Click to reveal answer

You can use the following command to check the content of the BUFR file:

bufr_dump -p WIGOS_0-20000-0-15015_20240921T120000.bufr4 | grep -v MISSING

Among the output, you will note the following keys:

wigosIdentifierSeries=0

wigosIssuerOfIdentifier=20000

wigosIssueNumber=0

wigosLocalIdentifierCharacter="15015"

blockNumber=15

stationNumber=15

stationOrSiteName="OCNA SUGATAG"

stationType=1

year=2024

month=9

day=21

hour=12

minute=0

latitude=47.7771

longitude=23.9405

heightOfStationGroundAboveMeanSeaLevel=503

heightOfBarometerAboveMeanSeaLevel=504

Note that this includes the data provided by the station_list.csv file.
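The coordinates are not copied digit for digit: the CSV latitude 47.7770616258 appears as 47.7771 in the BUFR file. Judging from this output, the coordinates were encoded with four decimal places, so to compare the two you can round the CSV values the same way. A sketch, assuming a POSIX shell with awk; round4 is a hypothetical helper name:

```shell
# Round a decimal value to 4 decimal places for comparison with the bufr_dump output
round4() {
  awk -v x="$1" 'BEGIN { printf "%.4f\n", x }'
}

round4 47.7770616258   # station_list.csv latitude  -> 47.7771
round4 23.9404602638   # station_list.csv longitude -> 23.9405
```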

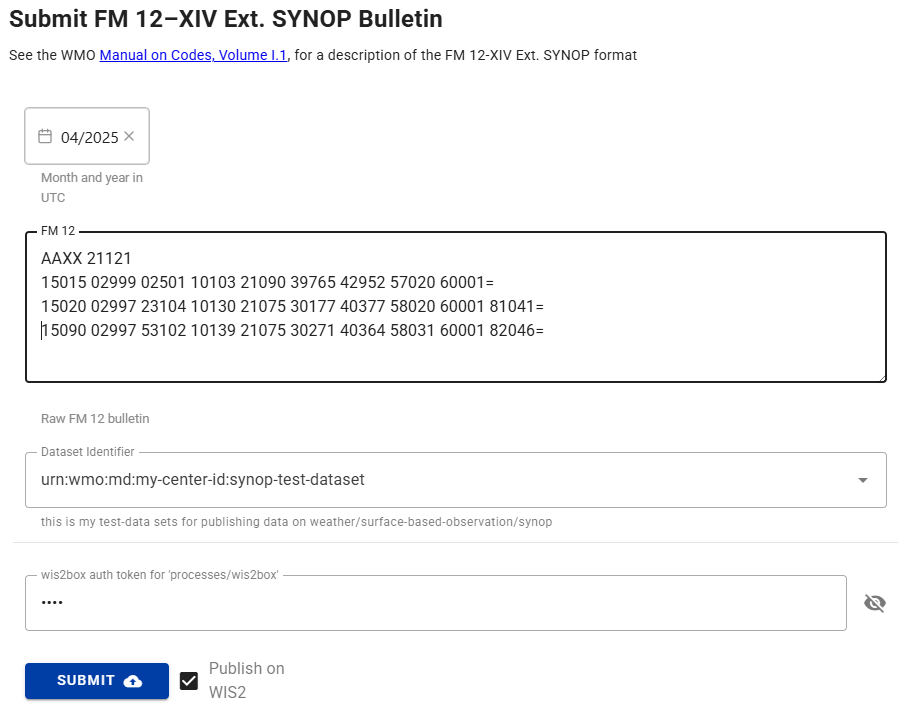

SYNOP Form in wis2box-webapp

The synop2bufr module is also used in the wis2box-webapp to convert FM-12 SYNOP data to BUFR format using a web-based input form.

To test this, go to http://YOUR-HOST/wis2box-webapp and log in.

Select the SYNOP Form from the menu on the left and paste the contents of the synop_message.txt file into the SYNOP message text area:

AAXX 21121

15015 02999 02501 10103 21090 39765 42952 57020 60001=

15020 02997 23104 10130 21075 30177 40377 58020 60001 81041=

15090 02997 53102 10139 21075 30271 40364 58031 60001 82046=

Question

Are you able to submit the form? What is the result?

Click to reveal answer

To submit the form, you need to select a dataset and provide the "processes/wis2box" token you created in a previous exercise.

If you provide an invalid token, you will see:

- Result: Unauthorized, please provide a valid 'processes/wis2box' token

If you provide a valid token, you will see "WARNINGS: 3". Click on "WARNINGS" to open the dropdown, which will show:

- Station 15015 not found in station file

- Station 15020 not found in station file

- Station 15090 not found in station file

To convert this data to BUFR format, you would need to configure the corresponding stations in your wis2box and ensure that the stations are associated with the topic for your dataset.

Note

In the ingesting-data-for-publication exercise, you ingested the file "synop_202412030900.txt" and it was converted to BUFR format by the synop2bufr module.

In the automated workflow in the wis2box, the year and month are automatically extracted from the filename and used to populate the --year and --month arguments required by synop2bufr, while the station metadata is automatically extracted from the station configuration in the wis2box.
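As an illustration of that filename-based step, the sketch below pulls the year and month out of a name like synop_202412030900.txt and prints the synop2bufr command it would correspond to. It is a hypothetical helper, not the wis2box implementation: it assumes the name ends in _YYYYMMDDHHMM.txt, as in the example file, and a POSIX shell with sed and grep:

```shell
# Hypothetical helper: print "YYYY MM" from a name ending in _YYYYMMDDHHMM.txt
year_month_from_name() {
  printf '%s\n' "$1" |
    sed -n 's/^.*_\([0-9][0-9][0-9][0-9]\)\([0-9][0-9]\)[0-9][0-9][0-9][0-9][0-9][0-9]\.txt$/\1 \2/p' |
    grep .    # non-zero exit status if the name did not match
}

ym=$(year_month_from_name synop_202412030900.txt)
year=${ym% *}
month=${ym#* }
# Print (not run) the corresponding synop2bufr command line
echo "synop2bufr data transform --metadata station_list.csv --output-dir ./ --year $year --month $month synop_202412030900.txt"
```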

csv2bufr conversion

Note

Make sure you are still logged in to the wis2box-api container and in the directory /root/data-conversion-exercises. If you exited the container in the previous exercise, you can log in again as follows:

cd ~/wis2box

python3 wis2box-ctl.py login wis2box-api

cd /root/data-conversion-exercises

Now let's look at how to convert CSV data to BUFR format using the csv2bufr module. The module is installed in the wis2box-api container and can be used from the command line as follows:

csv2bufr data transform \
    --bufr-template <bufr-mapping-template> \
    <input-csv-file>

The --bufr-template argument specifies the BUFR mapping template: a JSON file that defines how the input CSV data maps to the output BUFR data. Default mapping templates are installed in the directory /opt/csv2bufr/templates in the wis2box-api container.

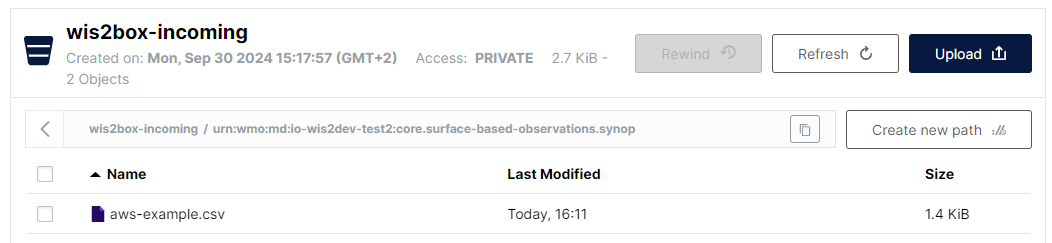

review the example CSV file

Review the content of the example CSV file aws-example.csv:

more aws-example.csv
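The header row of aws-example.csv is long, and csv2bufr maps the CSV columns to BUFR data through the template, so it can help to list the column names with their positions. A sketch, assuming a POSIX shell with awk and a comma-separated header without quoted fields; list_columns is a hypothetical helper name:

```shell
# Print each column name of a CSV header with its 1-based position (file argument or stdin)
list_columns() {
  awk -F',' 'NR == 1 { for (i = 1; i <= NF; i++) print i, $i; exit }' ${1:+"$1"}
}

printf '%s\n' 'wsi_series,wsi_issuer,wsi_issue_number,wsi_local' | list_columns
# 1 wsi_series
# 2 wsi_issuer
# 3 wsi_issue_number
# 4 wsi_local
```

For the exercise file: list_columns aws-example.csv.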

Question

How many rows of data are in the CSV file? What is the WIGOS station identifier of the station(s) reporting in the CSV file?

Click to reveal answer

The output shows the following:

wsi_series,wsi_issuer,wsi_issue_number,wsi_local,wmo_block_number,wmo_station_number,station_type,year,month,day,hour,minute,latitude,longitude,station_height_above_msl,barometer_height_above_msl,station_pressure,msl_pressure,geopotential_height,thermometer_height,air_temperature,dewpoint_temperature,relative_humidity,method_of_ground_state_measurement,ground_state,method_of_snow_depth_measurement,snow_depth,precipitation_intensity,anemometer_height,time_period_of_wind,wind_direction,wind_speed,maximum_wind_gust_direction_10_minutes,maximum_wind_gust_speed_10_minutes,maximum_wind_gust_direction_1_hour,maximum_wind_gust_speed_1_hour,maximum_wind_gust_direction_3_hours,maximum_wind_gust_speed_3_hours,rain_sensor_height,total_precipitation_1_hour,total_precipitation_3_hours,total_precipitation_6_hours,total_precipitation_12_hours,total_precipitation_24_hours

0,20000,0,60355,60,355,1,2024,3,31,1,0,47.77706163,23.94046026,503,504.43,100940,101040,1448,5,298.15,294.55,80,3,1,1,0,0.004,10,-10,30,3,30,5,40,9,20,11,2,4.7,5.3,7.9,9.5,11.4

0,20000,0,60355,60,355,1,2024,3,31,2,0,47.77706163,23.94046026,503,504.43,100940,101040,1448,5,25.,294.55,80,3,1,1,0,0.004,10,-10,30,3,30,5,40,9,20,11,2,4.7,5.3,7.9,9.5,11.4